Friday, 4 November 2016

TERENCE TAO's 246A, Notes 0: the complex numbers | What's new

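Kronecker is famously reported to have said, “God created the natural numbers; all else is the work of man”. The truth of this statement (literal or otherwise) is debatable; but one can certainly view the other standard number systems ${\bf Z}, {\bf Q}, {\bf R}, {\bf C}$ as (iterated) completions of the natural numbers ${\bf N}$ in various senses. For instance:

- The integers ${\bf Z}$ are the additive completion of the natural numbers ${\bf N}$ (the minimal additive group that contains a copy of ${\bf N}$).
- The rationals ${\bf Q}$ are the multiplicative completion of the integers ${\bf Z}$ (the minimal field that contains a copy of ${\bf Z}$).
- The reals ${\bf R}$ are the metric completion of the rationals ${\bf Q}$ (the minimal complete metric space that contains a copy of ${\bf Q}$).
- The complex numbers ${\bf C}$ are the algebraic completion of the reals ${\bf R}$ (the minimal algebraically closed field that contains a copy of ${\bf R}$).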

However, these descriptions cannot always serve directly as non-circular definitions. For instance, one cannot quite define the reals ${\bf R}$ from scratch as the metric completion of the rationals ${\bf Q}$, because the definition of a metric space itself requires the notion of the reals! (One can of course construct ${\bf R}$ by other means, for instance by using Dedekind cuts or by using uniform spaces in place of metric spaces.) The definition of the complex numbers as the algebraic completion of the reals does not suffer from such a circularity issue, but a certain amount of field theory is required to work with this definition initially. For the purposes of quickly constructing the complex numbers, it is thus more traditional to first define ${\bf C}$ as a quadratic extension of the reals ${\bf R}$, and more precisely as the extension ${\bf C} = {\bf R}(i)$ formed by adjoining a square root $i$ of $-1$ to the reals, that is to say a solution to the equation $i^2+1=0$. It is not immediately obvious that this extension is in fact algebraically closed; this is the content of the famous fundamental theorem of algebra, which we will prove later in this course.

The two equivalent definitions of ${\bf C}$ (as the algebraic closure, and as a quadratic extension, of the reals respectively) each reveal important features of the complex numbers in applications. Because ${\bf C}$ is algebraically closed, all polynomials over the complex numbers split completely, which leads to a good spectral theory for both finite-dimensional matrices and infinite-dimensional operators; in particular, one expects to be able to diagonalise most matrices and operators. Applying this theory to constant coefficient ordinary differential equations leads to a unified theory of such solutions, in which real-variable ODE behaviour such as exponential growth or decay, polynomial growth, and sinusoidal oscillation all become aspects of a single object, the complex exponential $z \mapsto e^z$ (or more generally, the matrix exponential $A \mapsto \exp(A)$).

Applying this theory more generally to diagonalise arbitrary translation-invariant operators over some locally compact abelian group, one arrives at Fourier analysis, which is thus most naturally phrased in terms of complex-valued functions rather than real-valued ones. If one drops the assumption that the underlying group is abelian, one instead discovers the representation theory of unitary representations, which is simpler to study than the real-valued counterpart of orthogonal representations. For closely related reasons, the theory of complex Lie groups is simpler than that of real Lie groups.

Meanwhile, the fact that the complex numbers are a quadratic extension of the reals lets one view the complex numbers geometrically as a two-dimensional plane over the reals (the Argand plane). Whereas a point singularity in the real line disconnects that line, a point singularity in the Argand plane leaves the rest of the plane connected (although, importantly, the punctured plane is no longer simply connected). As we shall see, this fact causes singularities in complex analytic functions to be better behaved than singularities of real analytic functions, ultimately leading to the powerful residue calculus for computing complex integrals. Remarkably, this calculus, when combined with the quintessentially complex-variable technique of contour shifting, can also be used to compute some (though certainly not all) definite integrals of real-valued functions that would be much more difficult to compute by purely real-variable methods; this is a prime example of Hadamard’s famous dictum that “the shortest path between two truths in the real domain passes through the complex domain”.

Another important geometric feature of the Argand plane is the angle between two tangent vectors at a point in the plane. As it turns out, the operation of multiplication by a non-zero complex scalar preserves the magnitude and orientation of such angles; the same fact is true for any non-degenerate complex analytic mapping $f$, as can be seen by performing a Taylor expansion to first order: near a point $z_0$, such a map acts on tangent vectors at $z_0$ by multiplication by the non-zero complex number $f'(z_0)$. This fact ties the study of complex mappings closely to that of the conformal geometry of the plane (and more generally, of two-dimensional surfaces and domains). In particular, one can use complex analytic maps to conformally transform one two-dimensional domain to another, leading among other things to the famous Riemann mapping theorem, and to the classification of Riemann surfaces.
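As a quick numerical sanity check of the angle-preservation claim, here is a minimal Python sketch. It uses Python's built-in floating-point `complex` type as a stand-in for ${\bf C}$; the helper names `signed_angle` and `pushforward`, the sample tangent vectors, the scalar $3-4i$, and the example map $f(z) = z^2+1$ at $z_0 = 1+i$ are all hypothetical choices, and the image of a tangent vector under $f$ is only approximated by a finite difference.

```python
import math


def signed_angle(u: complex, v: complex) -> float:
    """Signed angle (radians) from tangent vector u to tangent vector v,
    computed from the real dot and cross products of the two plane vectors."""
    dot = u.real * v.real + u.imag * v.imag
    cross = u.real * v.imag - u.imag * v.real
    return math.atan2(cross, dot)


def pushforward(f, z0: complex, u: complex, h: float = 1e-6) -> complex:
    """Finite-difference approximation to the image of the tangent vector u at z0 under f."""
    return (f(z0 + h * u) - f(z0)) / h


def f(z: complex) -> complex:
    """Hypothetical example map; analytic, with f'(z0) = 2 z0 != 0 at z0 = 1 + i."""
    return z * z + 1


u, v = 1 + 0j, 1 + 2j      # two tangent vectors at a point
c = 3 - 4j                 # a non-zero complex scalar
z0 = 1 + 1j

before = signed_angle(u, v)
after_scalar = signed_angle(c * u, c * v)                               # exact rotation-dilation
after_map = signed_angle(pushforward(f, z0, u), pushforward(f, z0, v))  # approximate
print(before, after_scalar, after_map)
```

The first two angles agree to rounding error, and the third agrees up to the $O(h)$ error of the finite difference.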

If one Taylor expands complex analytic maps to second order rather than first order, one discovers a further important property of these maps, namely that they are harmonic (their real and imaginary parts satisfy Laplace’s equation). This fact makes the class of complex analytic maps extremely rigid and well behaved analytically; indeed, the entire theory of elliptic PDE now comes into play, giving useful properties such as elliptic regularity and the maximum principle. In fact, due to the magic of residue calculus and contour shifting, we already obtain these properties for maps that are merely complex differentiable rather than complex analytic, which leads to the striking fact that complex differentiable functions are automatically analytic (in contrast to the real-variable case, in which real differentiable functions can be very far from being analytic).
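The harmonicity claim can also be tested numerically. The following sketch assumes the illustrative map $f(z) = z^3$ and a standard five-point finite-difference Laplacian (both our own choices, not taken from the notes). It checks that the Laplacians of $\mathrm{Re} f$ and $\mathrm{Im} f$ vanish up to rounding, whereas $|z|^2 = x^2+y^2$, which is not harmonic, has Laplacian $4$.

```python
def laplacian(g, x: float, y: float, h: float = 1e-3) -> float:
    """Five-point finite-difference approximation to g_xx + g_yy at (x, y)."""
    return (g(x + h, y) + g(x - h, y) + g(x, y + h) + g(x, y - h) - 4 * g(x, y)) / h ** 2


def f(z: complex) -> complex:
    """Illustrative complex analytic map (a polynomial)."""
    return z * z * z


def re_f(x: float, y: float) -> float:
    return f(complex(x, y)).real   # x^3 - 3 x y^2


def im_f(x: float, y: float) -> float:
    return f(complex(x, y)).imag   # 3 x^2 y - y^3


def abs_squared(x: float, y: float) -> float:
    """|z|^2 = x^2 + y^2: smooth but not harmonic (its Laplacian is 4)."""
    return x * x + y * y


for x, y in [(0.3, -1.2), (2.0, 0.5)]:
    print(laplacian(re_f, x, y), laplacian(im_f, x, y), laplacian(abs_squared, x, y))
```

Since all three test functions are polynomials of degree at most three, the five-point stencil is exact for them apart from floating-point rounding.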

The geometric structure of the complex numbers (and more generally of complex manifolds and complex varieties), when combined with the algebraic closure of the complex numbers, leads to the beautiful subject of complex algebraic geometry, which motivates the much more general theory developed in modern algebraic geometry. However, we will not develop the algebraic geometry aspects of complex analysis here.

Last, but not least, because of the good behaviour of Taylor series in the complex plane, complex analysis is an excellent setting in which to manipulate various generating functions, particularly Fourier series $\sum_{n=-\infty}^\infty a_n e^{2\pi i n \theta}$ (which can be viewed as boundary values of power (or Laurent) series $\sum_{n=-\infty}^\infty a_n z^n$), as well as Dirichlet series $\sum_{n=1}^\infty \frac{a_n}{n^s}$. The theory of contour integration provides a very useful dictionary between the asymptotic behaviour of the sequence $a_n$ and the complex analytic behaviour of the Dirichlet or Fourier series, particularly with regard to its poles and other singularities. This turns out to be a particularly handy dictionary in analytic number theory, for instance relating the distribution of the primes to the Riemann zeta function. Nowadays, many of the analytic number theory results first obtained through complex analysis (such as the prime number theorem) can also be obtained by more “real-variable” methods; however, the complex-analytic viewpoint is still extremely valuable and illuminating.

We will frequently touch upon many of these connections to other fields of mathematics in these lecture notes. However, these are mostly side remarks intended to provide context, and it is certainly possible to skip most of these tangents and focus purely on the complex analysis material in these notes if desired.

Note: complex analysis is a very visual subject, and one should draw plenty of pictures while learning it. I am however not planning to put too many pictures in these notes, partly as it is somewhat inconvenient to do so on this blog from a technical perspective, but also because pictures that one draws on one’s own are likely to be far more useful to you than pictures that were supplied by someone else.

Note: this section is far more algebraic in nature than the rest of the course; we are concentrating almost all of the algebraic preliminaries in this section in order to get them out of the way and focus subsequently on the analytic aspects of the complex numbers.

Thanks to the laws of high-school algebra, we know that the real numbers ${\bf R}$ form a field: they are endowed with the arithmetic operations of addition, subtraction, multiplication, and division, as well as the additive identity $0$ and multiplicative identity $1$, that obey the usual laws of algebra (i.e. the field axioms).

The algebraic structure of the reals does have one drawback though: not all (non-trivial) polynomials have roots! Most famously, the polynomial equation $x^2+1=0$ has no solutions over the reals, because $x^2$ is always non-negative, and hence $x^2+1$ is always strictly positive, whenever $x$ is a real number.

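As mentioned in the introduction, one traditional way to define the complex numbers ${\bf C}$ is as the smallest possible extension of the reals ${\bf R}$ that fixes this one specific problem:

Definition 1 (The complex numbers) A field of complex numbers (or complex field) is a field ${\bf C}$ that contains the real numbers ${\bf R}$ as a subfield, together with a root $i$ of the equation $i^2+1=0$, and which is generated by ${\bf R}$ and $i$, in the sense that no subfield of ${\bf C}$ other than ${\bf C}$ itself contains both ${\bf R}$ and $i$. (Strictly speaking, a complex field is thus a pair $({\bf C}, i)$, but we will usually abuse notation and write ${\bf C}$ for this pair.)

(We will take the real number system ${\bf R}$ as a given in this course; constructions of the real number system can of course be found in many real analysis texts, including my own.)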

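Definition 1 is short, but proposing it as a definition of the complex numbers raises some immediate questions, including:

- (Existence) Does a complex field ${\bf C}$ actually exist?
- (Uniqueness) Is such a field unique, at least up to isomorphism?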

We begin with existence. One can construct the complex numbers quite explicitly and quickly using the Argand plane construction; see Remark 7 below. However, from the perspective of higher mathematics, it is more natural to view the construction of the complex numbers as a special case of the more general algebraic construction that can extend any field $k$ by the root $\alpha$ of an irreducible nonlinear polynomial $P \in k[\mathrm{x}]$ over that field; this produces a field of complex numbers ${\bf C}$ when specialising to the case where $k = {\bf R}$ and $P = \mathrm{x}^2+1$. We will just describe this construction in that special case, leaving the general case as an exercise.

Starting with the real numbers ${\bf R}$, we can form the space ${\bf R}[\mathrm{x}]$ of (formal) polynomials

$$P(\mathrm{x}) = a_d \mathrm{x}^d + a_{d-1} \mathrm{x}^{d-1} + \dots + a_1 \mathrm{x} + a_0$$

with real co-efficients $a_0, \dots, a_d$ and arbitrary non-negative integer $d$ in one indeterminate variable $\mathrm{x}$. (A small technical point: we do not view this indeterminate $\mathrm{x}$ as belonging to any particular domain such as ${\bf R}$, so we do not view these polynomials $P$ as functions but merely as formal expressions involving a placeholder symbol $\mathrm{x}$ (which we have rendered in Roman type to indicate its formal character). In this particular characteristic zero setting of working over the reals, it turns out to be harmless to identify each polynomial $P$ with the corresponding function $P: {\bf R} \to {\bf R}$ formed by interpreting the indeterminate $\mathrm{x}$ as a real variable; but if one were to generalise this construction to positive characteristic fields, and particularly finite fields, then one can run into difficulties if polynomials are not treated formally, due to the fact that two distinct formal polynomials might agree on all inputs in a given finite field (e.g. the polynomials $\mathrm{x}$ and $\mathrm{x}^p$ agree for all $x$ in the finite field ${\bf F}_p$, by Fermat’s little theorem). However, this subtlety can be ignored for the purposes of this course.) This space ${\bf R}[\mathrm{x}]$ of polynomials has a pretty good algebraic structure: in particular, the usual operations of addition, subtraction, and multiplication on polynomials, together with the zero polynomial $0$ and the unit polynomial $1$, give ${\bf R}[\mathrm{x}]$ the structure of a (unital) commutative ring. This commutative ring also contains ${\bf R}$ as a subring (identifying each real number $a$ with the degree zero polynomial $a \mathrm{x}^0$). The ring ${\bf R}[\mathrm{x}]$ is however not a field, because many non-zero elements of ${\bf R}[\mathrm{x}]$ do not have multiplicative inverses. (In fact, no non-constant polynomial in ${\bf R}[\mathrm{x}]$ has an inverse in ${\bf R}[\mathrm{x}]$: the degree of a product of two non-zero polynomials is the sum of the degrees of the factors, so multiplying a non-constant polynomial by any non-zero polynomial yields a polynomial of degree at least one, which cannot equal the constant polynomial $1$.)
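To make the formal-versus-functional distinction concrete, the sketch below stores a polynomial as its list of coefficients $[a_0, a_1, \dots, a_d]$ (an illustrative representation of our own choosing) and takes $p = 5$ as an example prime. Two different coefficient lists are different formal polynomials even when they define the same function on ${\bf F}_p$; the last line illustrates the additivity of degrees used above.

```python
def poly_mul(p: list[int], q: list[int]) -> list[int]:
    """Multiply formal polynomials stored as coefficient lists [a_0, a_1, ..., a_d]."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def degree(p: list[int]) -> int:
    """Degree of a non-zero polynomial: index of its last non-zero coefficient."""
    return max(k for k, a in enumerate(p) if a != 0)


def evaluate_mod(p: list[int], t: int, prime: int) -> int:
    """Evaluate p at the element t of the finite field F_prime."""
    return sum(a * pow(t, k, prime) for k, a in enumerate(p)) % prime


prime = 5
x_poly = [0, 1]                    # the formal polynomial x
x_to_the_p = [0] * prime + [1]     # the formal polynomial x^5

# Different formal polynomials (different coefficient lists)...
print(x_poly != x_to_the_p)
# ...that nevertheless define the same function on F_5 (Fermat's little theorem).
print(all(evaluate_mod(x_poly, t, prime) == evaluate_mod(x_to_the_p, t, prime)
          for t in range(prime)))

# Degrees add under multiplication, so no non-constant polynomial can multiply to 1.
f, g = [1, 0, 1], [3, -2]          # x^2 + 1 and 3 - 2x
print(poly_mul(f, g), degree(poly_mul(f, g)) == degree(f) + degree(g))
```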

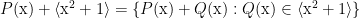

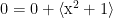

If a unital commutative ring fails to be a field, then it will instead possess a number of non-trivial ideals. The only ideal we will need to consider here is the principal ideal

$$\langle \mathrm{x}^2+1 \rangle := \{ (\mathrm{x}^2+1) P(\mathrm{x}): P(\mathrm{x}) \in {\bf R}[\mathrm{x}] \}.$$

This is clearly an ideal of ${\bf R}[\mathrm{x}]$: it is closed under addition and subtraction, and the product of any element of the ideal $\langle \mathrm{x}^2+1 \rangle$ with an element of the full ring ${\bf R}[\mathrm{x}]$ remains in the ideal $\langle \mathrm{x}^2+1 \rangle$.

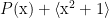

We now define to be the quotient space

to be the quotient space

![\displaystyle {\bf C} := {\bf R}[\mathrm{x}] / \langle \mathrm{x}^2+1 \rangle \displaystyle {\bf C} := {\bf R}[\mathrm{x}] / \langle \mathrm{x}^2+1 \rangle](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%7B%5Cbf+C%7D+%3A%3D+%7B%5Cbf+R%7D%5B%5Cmathrm%7Bx%7D%5D+%2F+%5Clangle+%5Cmathrm%7Bx%7D%5E2%2B1+%5Crangle&bg=ffffff&fg=000000&s=0) of the commutative ring

of the commutative ring ![{{\bf R}[\mathrm{x}]} {{\bf R}[\mathrm{x}]}](https://s0.wp.com/latex.php?latex=%7B%7B%5Cbf+R%7D%5B%5Cmathrm%7Bx%7D%5D%7D&bg=ffffff&fg=000000&s=0) by the ideal

by the ideal  ; this is the space of cosets

; this is the space of cosets  of

of  in

in ![{{\bf R}[\mathrm{x}]} {{\bf R}[\mathrm{x}]}](https://s0.wp.com/latex.php?latex=%7B%7B%5Cbf+R%7D%5B%5Cmathrm%7Bx%7D%5D%7D&bg=ffffff&fg=000000&s=0) . Because

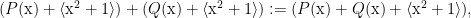

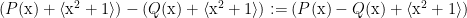

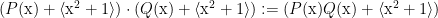

. Because  is an ideal, there is an obvious way to define addition, subtraction, and multiplication in

is an ideal, there is an obvious way to define addition, subtraction, and multiplication in  , namely by setting

, namely by setting

and

and

for all

for all ![{P(\mathrm{x}), Q(\mathrm{x}) \in {\bf R}[\mathrm{x}]} {P(\mathrm{x}), Q(\mathrm{x}) \in {\bf R}[\mathrm{x}]}](https://s0.wp.com/latex.php?latex=%7BP%28%5Cmathrm%7Bx%7D%29%2C+Q%28%5Cmathrm%7Bx%7D%29+%5Cin+%7B%5Cbf+R%7D%5B%5Cmathrm%7Bx%7D%5D%7D&bg=ffffff&fg=000000&s=0) ; these operations, together with the additive identity

; these operations, together with the additive identity  and the multiplicative identity

and the multiplicative identity  , can be easily verified to give

, can be easily verified to give  the structure of a commutative ring. Also, the real line

the structure of a commutative ring. Also, the real line  embeds into

embeds into  by identifying each real number

by identifying each real number  with the coset

with the coset  ; note that this identification is injective, as no real number is a multiple of the polynomial

; note that this identification is injective, as no real number is a multiple of the polynomial  .

.
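The coset arithmetic can be sketched in Python by representing each coset $P(\mathrm{x}) + \langle \mathrm{x}^2+1 \rangle$ by the remainder $a + b\mathrm{x}$ of $P$ upon division by $\mathrm{x}^2+1$, computed using $\mathrm{x}^2 \equiv -1$. The helper names and the particular polynomials below are hypothetical; the point is that changing $P$ and $Q$ by multiples of $\mathrm{x}^2+1$ does not change the remainders of their sum or product, which is why the operations are well defined.

```python
def poly_add(p, q):
    """Add formal polynomials stored as coefficient lists [a_0, a_1, ...]."""
    n = max(len(p), len(q))
    return [(p[k] if k < len(p) else 0) + (q[k] if k < len(q) else 0) for k in range(n)]


def poly_mul(p, q):
    """Multiply formal polynomials stored as coefficient lists."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def reduce_mod(p):
    """Remainder a + b*x of p modulo x^2 + 1, returned as (a, b).

    Uses x^2 = -1 on cosets, so x^k reduces to (-1)^(k // 2) * x^(k % 2).
    """
    a = b = 0
    for k, c in enumerate(p):
        sign = -1 if (k // 2) % 2 else 1
        if k % 2 == 0:
            a += sign * c
        else:
            b += sign * c
    return (a, b)


X2_PLUS_1 = [1, 0, 1]
P, Q = [1, 2], [3, -1, 4]                    # 1 + 2x and 3 - x + 4x^2
A, B = [0, 5], [-2, 1, 1]                    # arbitrary polynomial multipliers
P2 = poly_add(P, poly_mul(X2_PLUS_1, A))     # another representative of the coset of P
Q2 = poly_add(Q, poly_mul(X2_PLUS_1, B))     # another representative of the coset of Q

print(reduce_mod(poly_add(P, Q)), reduce_mod(poly_add(P2, Q2)))   # (0, 1) (0, 1)
print(reduce_mod(poly_mul(P, Q)), reduce_mod(poly_mul(P2, Q2)))   # (1, -3) (1, -3)
```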

If we define $i$ to be the coset

$$i := \mathrm{x} + \langle \mathrm{x}^2+1 \rangle,$$

then it is clear from construction that $i^2+1 = 0$, since $i^2+1 = (\mathrm{x}^2+1) + \langle \mathrm{x}^2+1 \rangle$ is the zero coset. Thus ${\bf C}$ contains both ${\bf R}$ and a solution of the equation $i^2+1=0$. Also, since every element of ${\bf C}$ is of the form $P(\mathrm{x}) + \langle \mathrm{x}^2+1 \rangle$ for some polynomial $P \in {\bf R}[\mathrm{x}]$, we see that every element of ${\bf C}$ is a polynomial combination $P(i)$ of $i$ with real coefficients; in particular, any subring of ${\bf C}$ that contains ${\bf R}$ and $i$ will necessarily have to contain every element of ${\bf C}$. Thus ${\bf C}$ is generated by ${\bf R}$ and $i$.
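Using the same illustrative representation of cosets by remainders (the helper is redefined so that this block stands alone), the sketch below checks that the coset of $\mathrm{x}$ plays the role of $i$, that $\mathrm{x}^2+1$ lands in the zero coset, and that the coset of a polynomial $P$ matches the value $P(i)$ computed with Python's built-in `1j`. The random test polynomials are an arbitrary choice; with small integer coefficients the floating-point arithmetic here is exact.

```python
import random


def reduce_mod(p):
    """Remainder a + b*x of p modulo x^2 + 1, as (a, b); x^k reduces to (-1)^(k//2) x^(k%2)."""
    a = b = 0
    for k, c in enumerate(p):
        sign = -1 if (k // 2) % 2 else 1
        if k % 2 == 0:
            a += sign * c
        else:
            b += sign * c
    return (a, b)


def evaluate(p, z):
    """Evaluate the polynomial with coefficient list p at z by Horner's rule."""
    result = 0j
    for c in reversed(p):
        result = result * z + c
    return result


i_coset = reduce_mod([0, 1])        # i := x + <x^2 + 1>
print(i_coset)                      # (0, 1)
print(reduce_mod([1, 0, 1]))        # i^2 + 1 lies in the zero coset: (0, 0)

# The coset of P corresponds to P(i); Python's 1j plays the role of i here.
random.seed(0)
for _ in range(5):
    p = [random.randint(-9, 9) for _ in range(random.randint(1, 8))]
    a, b = reduce_mod(p)
    assert evaluate(p, 1j) == complex(a, b)
```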

The only remaining thing to verify is that is a field and not just a commutative ring. In other words, we need to show that every non-zero element of

is a field and not just a commutative ring. In other words, we need to show that every non-zero element of  has a multiplicative inverse. This stems from a particular property of the polynomial

has a multiplicative inverse. This stems from a particular property of the polynomial  , namely that it is irreducible in

, namely that it is irreducible in ![{{\bf R}[\mathrm{x}]} {{\bf R}[\mathrm{x}]}](https://s0.wp.com/latex.php?latex=%7B%7B%5Cbf+R%7D%5B%5Cmathrm%7Bx%7D%5D%7D&bg=ffffff&fg=000000&s=0) . That is to say, we cannot factor

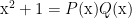

. That is to say, we cannot factor  into non-constant polynomials

into non-constant polynomials

with

with ![{P(\mathrm{x}), Q(\mathrm{x}) \in {\bf R}[\mathrm{x}]} {P(\mathrm{x}), Q(\mathrm{x}) \in {\bf R}[\mathrm{x}]}](https://s0.wp.com/latex.php?latex=%7BP%28%5Cmathrm%7Bx%7D%29%2C+Q%28%5Cmathrm%7Bx%7D%29+%5Cin+%7B%5Cbf+R%7D%5B%5Cmathrm%7Bx%7D%5D%7D&bg=ffffff&fg=000000&s=0) . Indeed, as

. Indeed, as  has degree two, the only possible way such a factorisation could occur is if

has degree two, the only possible way such a factorisation could occur is if  both have degree one, which would imply that the polynomial

both have degree one, which would imply that the polynomial  has a root in the reals

has a root in the reals  , which of course it does not.

, which of course it does not.

Because the polynomial $\mathrm{x}^2+1$ is irreducible, it is also prime: if $\mathrm{x}^2+1$ divides a product $P(\mathrm{x}) Q(\mathrm{x})$ of two polynomials in ${\bf R}[\mathrm{x}]$, then it must also divide at least one of the factors $P(\mathrm{x})$, $Q(\mathrm{x})$. Indeed, if $\mathrm{x}^2+1$ does not divide $P(\mathrm{x})$, then by irreducibility the greatest common divisor of $\mathrm{x}^2+1$ and $P(\mathrm{x})$ is $1$. Applying the Euclidean algorithm for polynomials, we then obtain a representation of $1$ as

$$1 = R(\mathrm{x}) (\mathrm{x}^2+1) + S(\mathrm{x}) P(\mathrm{x})$$

for some polynomials $R(\mathrm{x}), S(\mathrm{x})$. Multiplying both sides by $Q(\mathrm{x})$, we obtain

$$Q(\mathrm{x}) = R(\mathrm{x}) Q(\mathrm{x}) (\mathrm{x}^2+1) + S(\mathrm{x}) P(\mathrm{x}) Q(\mathrm{x}),$$

and since both terms on the right-hand side are multiples of $\mathrm{x}^2+1$, we conclude that $Q(\mathrm{x})$ is a multiple of $\mathrm{x}^2+1$.
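The Euclidean-algorithm step can be carried out mechanically. Below is a minimal sketch of the extended Euclidean algorithm for polynomials over ${\bf Q}$, using exact `fractions.Fraction` arithmetic and coefficient lists $[a_0, a_1, \dots]$; the helper names (`divmod_poly`, `bezout`, and so on) are our own hypothetical choices, and $P(\mathrm{x}) = \mathrm{x}+2$ is an arbitrary example polynomial not divisible by $\mathrm{x}^2+1$.

```python
from fractions import Fraction


def trim(p):
    """Drop trailing zero coefficients; the zero polynomial is the empty list."""
    p = list(p)
    while p and p[-1] == 0:
        p.pop()
    return p


def add(p, q):
    n = max(len(p), len(q))
    return trim([(p[k] if k < len(p) else 0) + (q[k] if k < len(q) else 0) for k in range(n)])


def neg(p):
    return [-c for c in p]


def mul(p, q):
    if not p or not q:
        return []
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return trim(out)


def divmod_poly(a, b):
    """Long division over Q: return (q, r) with a = q*b + r and deg r < deg b (b non-zero)."""
    a, b = trim(a), trim(b)
    q = [Fraction(0)] * max(len(a) - len(b) + 1, 1)
    while len(a) >= len(b):
        shift = len(a) - len(b)
        coeff = Fraction(a[-1]) / b[-1]
        q[shift] = coeff
        a = add(a, neg([0] * shift + [coeff * c for c in b]))  # cancels the leading term
    return trim(q), a


def bezout(f, g):
    """Extended Euclidean algorithm: return (R, S) with R*f + S*g == 1 when gcd(f, g) = 1."""
    r0, r1 = trim(f), trim(g)
    s0, s1 = [Fraction(1)], []
    t0, t1 = [], [Fraction(1)]
    while r1:
        quotient, remainder = divmod_poly(r0, r1)
        r0, r1 = r1, remainder
        s0, s1 = s1, add(s0, neg(mul(quotient, s1)))
        t0, t1 = t1, add(t0, neg(mul(quotient, t1)))
    if len(r0) != 1:
        raise ValueError("f and g are not coprime")
    lead = Fraction(r0[0])        # normalise the constant gcd to 1
    return [c / lead for c in s0], [c / lead for c in t0]


x2_plus_1 = [1, 0, 1]             # x^2 + 1
P = [2, 1]                        # P(x) = 2 + x
R, S = bezout(x2_plus_1, P)
print(R, S)                       # R = 1/5, S = (2 - x)/5
assert add(mul(R, x2_plus_1), mul(S, P)) == [1]
```

Reading the identity $1 = \frac{1}{5}(\mathrm{x}^2+1) + \frac{2-\mathrm{x}}{5}(\mathrm{x}+2)$ modulo $\mathrm{x}^2+1$ shows that $(2+i)^{-1} = (2-i)/5$, which previews how Bézout identities produce inverses in the quotient.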

Since $\mathrm{x}^2+1$ is prime, the quotient space ${\bf C} = {\bf R}[\mathrm{x}] / \langle \mathrm{x}^2+1 \rangle$ is an integral domain: there are no zero-divisors in ${\bf C}$ other than zero. This brings us closer to the task of showing that ${\bf C}$ is a field, but we are not quite there yet; note for instance that ${\bf R}[\mathrm{x}]$ is an integral domain, but not a field. But one can finish up by using finite dimensionality. As ${\bf C}$ is a ring containing the field ${\bf R}$, it is certainly a vector space over ${\bf R}$; as ${\bf C}$ is generated by ${\bf R}$ and $i$, and $i^2 = -1$, we see that it is in fact a two-dimensional vector space over ${\bf R}$, spanned by $1$ and $i$ (which are linearly independent, as $i$ cannot be real: the square of a real number is never $-1$). In particular, it is finite dimensional. For any non-zero $z \in {\bf C}$, the multiplication map $w \mapsto zw$ is an ${\bf R}$-linear map from this finite-dimensional vector space to itself. As ${\bf C}$ is an integral domain, this map is injective; by finite-dimensionality, it is therefore surjective (by the rank-nullity theorem). In particular, there exists $w \in {\bf C}$ such that $zw = 1$, and hence $z$ is invertible and ${\bf C}$ is a field. This concludes the construction of a complex field ${\bf C}$.
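In the basis $1, i$, multiplication by $z = a+bi$ is the ${\bf R}$-linear map with matrix $\begin{pmatrix} a & -b \\ b & a \end{pmatrix}$, whose determinant $a^2+b^2$ is non-zero whenever $z \neq 0$. The sketch below uses this matrix together with Cramer's rule (a concrete substitute for, not a transcription of, the rank-nullity argument above) to solve $zw = 1$ exactly for the sample value $z = 3+4i$; the function names are hypothetical.

```python
from fractions import Fraction


def mult_matrix(a, b):
    """Matrix of the R-linear map w -> (a + b i) w in the basis (1, i).

    (a + b i)(c + d i) = (a c - b d) + (b c + a d) i.
    """
    return [[a, -b], [b, a]]


def solve_2x2(m, rhs):
    """Solve m @ v = rhs by Cramer's rule (requires det(m) != 0)."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    if det == 0:
        raise ZeroDivisionError("the multiplication map is not injective")
    x = (rhs[0] * m[1][1] - m[0][1] * rhs[1]) / det
    y = (m[0][0] * rhs[1] - rhs[0] * m[1][0]) / det
    return x, y


a, b = Fraction(3), Fraction(4)        # z = 3 + 4i
m = mult_matrix(a, b)                  # determinant a^2 + b^2 = 25
c, d = solve_2x2(m, (1, 0))            # find w = c + d i with z w = 1
print(c, d)                            # 3/25 -4/25
assert (a * c - b * d, b * c + a * d) == (1, 0)   # z w = 1 in coordinates
```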

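Once a complex field has been constructed, its uniqueness up to isomorphism is a straightforward exercise:

Exercise 4 (Uniqueness up to isomorphism) Suppose that $({\bf C}, i)$ and $({\bf C}', i')$ are two complex fields. Show that there is a unique field isomorphism $\iota: {\bf C} \to {\bf C}'$ that is the identity on ${\bf R}$ and maps $i$ to $i'$. (We refer to such a map as a complex field isomorphism.)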

In view of this uniqueness, we now fix one complex field ${\bf C}$ and designate it as “the” complex field; the other complex fields out there will no longer be of much importance in this course (or indeed, in most of mathematics), with one small exception that we will get to later in this section. One can, if one wishes, use the above abstract algebraic construction $({\bf R}[\mathrm{x}] / \langle \mathrm{x}^2+1 \rangle, \mathrm{x} + \langle \mathrm{x}^2+1 \rangle)$ as the choice for “the” complex field ${\bf C}$, but one can certainly pick other choices if desired (e.g. the Argand plane construction in Remark 7 below). But in view of Exercise 4, the precise construction of ${\bf C}$ is not terribly relevant for the purposes of actually doing complex analysis, much as the question of whether to construct the real numbers using Dedekind cuts, equivalence classes of Cauchy sequences, or some other construction is not terribly relevant for the purposes of actually doing real analysis. So, from here on out, we will no longer refer to the precise construction of ${\bf C}$ used; the reader may certainly substitute his or her own favourite construction of ${\bf C}$ in place of ${\bf R}[\mathrm{x}] / \langle \mathrm{x}^2+1 \rangle$ if desired, with essentially no change to the rest of the lecture notes.

Elements of ${\bf C}$ of the form $iy$ for $y$ real are known as purely imaginary numbers; the terminology is colourful, but despite the name, imaginary numbers have precisely the same first-class mathematical object status as real numbers. Since ${\bf C}$ is spanned over ${\bf R}$ by the linearly independent elements $1$ and $i$, every complex number can be written uniquely as $z = x+iy$ with $x, y$ real; the real components $x, y$ of $z$ are known as the real part $\mathrm{Re}(z)$ and imaginary part $\mathrm{Im}(z)$ of $z$ respectively. Complex numbers that are not real are occasionally referred to as strictly complex numbers. In the complex plane, the set ${\bf R}$ of real numbers forms the real axis, and the set $i{\bf R}$ of imaginary numbers forms the imaginary axis. Traditionally, elements of ${\bf C}$ are denoted with symbols such as $z$, $w$, or $\zeta$, while symbols such as $x$ and $y$ are typically intended to represent real numbers instead.

We define the power $z^n$ of a complex number $z$ to a non-negative integer $n$ by declaring inductively $z^0 := 1$ and $z^{n+1} := z^n z$ for $n \geq 0$; in particular we adopt the usual convention that $0^0 = 1$ (when thinking of the base $0$ as a complex number, and the exponent $0$ as a non-negative integer). For negative integers $n$, we define $z^n := 1/z^{-n}$ for non-zero $z$; we leave $z^n$ undefined when $z$ is zero and $n$ is negative. At the present time we do not attempt to define $z^\alpha$ for any exponent $\alpha$ other than an integer; we will return to such exponentiation operations later in the course, though we will at least define the complex exponential $e^z$ for any complex $z$ later in this set of notes.
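A direct transcription of the inductive definition of integer powers, as a minimal sketch: it uses Python's floating-point `complex` type in place of ${\bf C}$, and `complex_power` is a hypothetical name. Negative exponents go through the reciprocal, and the case $z = 0$ with $n < 0$ is rejected rather than assigned a value, mirroring the convention above.

```python
def complex_power(z: complex, n: int) -> complex:
    """z^n for an integer n, via z^0 = 1, z^(n+1) = z^n * z, and z^n = 1 / z^(-n) for n < 0."""
    if n < 0:
        if z == 0:
            raise ZeroDivisionError("z^n is left undefined for z = 0 and n negative")
        return 1 / complex_power(z, -n)
    result = complex(1)          # z^0 = 1, including the convention 0^0 = 1
    for _ in range(n):
        result = result * z      # z^(k+1) = z^k * z
    return result


assert complex_power(0j, 0) == 1            # the convention 0^0 = 1
assert complex_power(1j, 4) == 1            # i^4 = 1
assert complex_power(1j, -1) == -1j         # i^(-1) = -i
assert complex_power(2 + 1j, 3) == 2 + 11j  # (2 + i)^3 = 2 + 11i
```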

By definition, a complex field is a field

is a field  together with a root

together with a root  of the equation

of the equation  . But if

. But if  is a root of the equation

is a root of the equation  , then so is

, then so is  (indeed, from the factorisation

(indeed, from the factorisation  we see that these are the only two roots of this quadratic equation. Thus we have another complex field

we see that these are the only two roots of this quadratic equation. Thus we have another complex field  which differs from

which differs from  only in the choice of root

only in the choice of root  . By Exercise 4, there is a unique field isomorphism from

. By Exercise 4, there is a unique field isomorphism from  to

to  that maps

that maps  to

to  (i.e. a complex field isomorphism from

(i.e. a complex field isomorphism from  to

to  ); this operation is known as complex conjugation and is denoted

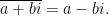

); this operation is known as complex conjugation and is denoted  . In coordinates, we have

. In coordinates, we have

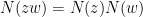

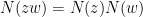

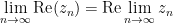

Since complex conjugation is a field isomorphism, we have in particular that

$$\overline{z+w} = \overline{z} + \overline{w}$$

and

$$\overline{zw} = \overline{z}\, \overline{w}$$

for all complex numbers $z, w$. It is also clear that complex conjugation fixes the real numbers, and only the real numbers: $\overline{z} = z$ if and only if $z$ is real. Geometrically, complex conjugation is the operation of reflection in the complex plane across the real axis. It is clearly an involution in the sense that it is its own inverse:

$$\overline{\overline{z}} = z.$$

One can also relate the real and imaginary parts to complex conjugation via the identities

$$\mathrm{Re}(z) = \frac{z + \overline{z}}{2}, \qquad \mathrm{Im}(z) = \frac{z - \overline{z}}{2i}.$$
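The conjugation identities can be spot-checked with Python's built-in `complex.conjugate()`. This is only a floating-point illustration, not a proof; the sample values are arbitrary choices with dyadic real and imaginary parts, so the arithmetic involved is exact and plain `==` comparisons are safe here.

```python
samples = [(3 + 4j, -1 + 2j), (0.5 - 1.5j, 2 + 0j), (-2j, 7 + 1j)]

for z, w in samples:
    # Conjugation respects sums and products (it is a field isomorphism).
    assert (z + w).conjugate() == z.conjugate() + w.conjugate()
    assert (z * w).conjugate() == z.conjugate() * w.conjugate()
    # It is an involution.
    assert z.conjugate().conjugate() == z
    # Re(z) = (z + conj z) / 2 and Im(z) = (z - conj z) / (2i).
    assert (z + z.conjugate()) / 2 == z.real
    assert (z - z.conjugate()) / 2j == z.imag

# It fixes real numbers and moves numbers with non-zero imaginary part.
assert (5 + 0j).conjugate() == 5 + 0j
assert (5 + 1j).conjugate() != 5 + 1j
print("all conjugation identities hold on the samples")
```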

by its complex conjugate

by its complex conjugate  , we obtain a quantity

, we obtain a quantity  which is invariant with respect to conjugation (i.e.

which is invariant with respect to conjugation (i.e.  ) and is therefore real. The map

) and is therefore real. The map  produced this way is known in field theory as the norm form of

produced this way is known in field theory as the norm form of  over

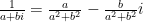

over  ; it is clearly multiplicative in the sense that

; it is clearly multiplicative in the sense that  , and is only zero when

, and is only zero when  is zero. It can be used to link multiplicative inversion with complex conjugation, in that we clearly have

is zero. It can be used to link multiplicative inversion with complex conjugation, in that we clearly have

for any non-zero complex number . In coordinates, we have

. In coordinates, we have

(thus recovering, by the way, the inversion formula

(thus recovering, by the way, the inversion formula  implicit in Remark 7). In coordinates, the multiplicativity

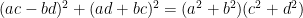

implicit in Remark 7). In coordinates, the multiplicativity  takes the form of Lagrange’s identity

takes the form of Lagrange’s identity
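The norm form, the inversion formula (2) and Lagrange’s identity are easy to spot-check numerically. The following is a minimal Python sketch using the built-in `complex` type; the helper names `norm_form`, `inverse_via_conjugate` and `lagrange_identity_holds` are illustrative and not from the notes. Lagrange’s identity is checked in exact integer arithmetic, so no rounding tolerance is needed there.

```python
from itertools import product


def norm_form(z: complex) -> float:
    """N(z) = z * conj(z); the result is real, so return its real part."""
    return (z * z.conjugate()).real


def inverse_via_conjugate(z: complex) -> complex:
    """Formula (2): 1/z = conj(z) / N(z), valid for non-zero z."""
    if z == 0:
        raise ZeroDivisionError("z must be non-zero")
    return z.conjugate() / norm_form(z)


def lagrange_identity_holds(a: int, b: int, c: int, d: int) -> bool:
    """Check (ac - bd)^2 + (ad + bc)^2 == (a^2 + b^2)(c^2 + d^2) exactly."""
    return (a * c - b * d) ** 2 + (a * d + b * c) ** 2 == (a * a + b * b) * (c * c + d * d)


# Exhaustive check of Lagrange's identity on a small integer grid.
grid_ok = all(lagrange_identity_holds(*t) for t in product(range(-3, 4), repeat=4))

z = 3 + 4j
print(norm_form(z))              # 25.0
print(inverse_via_conjugate(z))  # (0.12-0.16j)
print(grid_ok)                   # True
```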

The norm form $N$ of the complex numbers has the feature of being positive definite: $N(z)$ is always non-negative (and strictly positive when $z$ is non-zero). This is a feature that is somewhat special to the complex numbers; for instance, the quadratic extension $\mathbb{Q}(\sqrt{2})$ of the rationals $\mathbb{Q}$ by $\sqrt{2}$ has the norm form $N(a + b\sqrt{2}) = (a + b\sqrt{2})(a - b\sqrt{2}) = a^2 - 2b^2$, which is indefinite. One can view this positive definiteness of the norm form as the one remaining vestige in $\mathbb{C}$ of the order structure $<$ on the reals, which as remarked previously is no longer present directly in the complex numbers. (One can also view the positive definiteness of the norm form as a consequence of the topological connectedness of the punctured complex plane $\mathbb{C} \setminus \{0\}$: the norm form is positive at $z = 1$ and, being continuous and non-vanishing on $\mathbb{C} \setminus \{0\}$, cannot change sign anywhere there, so is forced to be positive on the rest of this connected region.)
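To see the indefiniteness concretely, one can compute the norm form of $\mathbb{Q}(\sqrt{2})$ exactly. In this minimal Python sketch, an element $a + b\sqrt{2}$ is stored as a pair of `Fraction`s; that representation and the helper names `mul_sqrt2` and `norm_sqrt2` are choices made here for illustration. The norm takes the value $+1$ at $1$ but $-1$ at $1 + \sqrt{2}$, while remaining multiplicative.

```python
from fractions import Fraction

# Hypothetical representation: a + b*sqrt(2) is stored as the pair (a, b).
Sqrt2Elt = tuple[Fraction, Fraction]


def mul_sqrt2(x: Sqrt2Elt, y: Sqrt2Elt) -> Sqrt2Elt:
    """(a + b√2)(c + d√2) = (ac + 2bd) + (ad + bc)√2."""
    a, b = x
    c, d = y
    return (a * c + 2 * b * d, a * d + b * c)


def norm_sqrt2(x: Sqrt2Elt) -> Fraction:
    """N(a + b√2) = (a + b√2)(a - b√2) = a^2 - 2b^2."""
    a, b = x
    return a * a - 2 * b * b


one = (Fraction(1), Fraction(0))
x = (Fraction(1), Fraction(1))   # 1 + √2
y = (Fraction(3), Fraction(2))   # 3 + 2√2
print(norm_sqrt2(one), norm_sqrt2(x))                                # 1 -1
print(norm_sqrt2(mul_sqrt2(x, y)) == norm_sqrt2(x) * norm_sqrt2(y))  # True
```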

One consequence of positive definiteness is that the bilinear form

becomes a positive definite inner product on

becomes a positive definite inner product on  (viewed as a vector space over

(viewed as a vector space over  ). In particular, this turns the complex numbers into an inner product space over the reals. From the usual theory of inner product spaces, we can then construct a norm

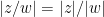

). In particular, this turns the complex numbers into an inner product space over the reals. From the usual theory of inner product spaces, we can then construct a norm

(thus, the norm is the square root of the norm form) which obeys the triangle inequality

(thus, the norm is the square root of the norm form) which obeys the triangle inequality

(which implies the usual permutations of this inequality, such as ), and from the multiplicativity of the norm form we also have

), and from the multiplicativity of the norm form we also have

(and hence also if

if  is non-zero) and from the involutive nature of complex conjugation we have

is non-zero) and from the involutive nature of complex conjugation we have

The norm $|z|$ clearly extends the absolute value operation $x \mapsto |x|$ on the real numbers, and so we also refer to the norm $|z|$ of a complex number $z$ as its absolute value or magnitude. In coordinates, we have

$$|a + bi| = \sqrt{a^2 + b^2}, \tag{6}$$

thus for instance $|i| = 1$, and from (6) we also immediately have the useful inequalities

$$|\mathrm{Re}(z)|,\ |\mathrm{Im}(z)| \le |z| \le |\mathrm{Re}(z)| + |\mathrm{Im}(z)|. \tag{7}$$
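Properties (3), (4), (5) and (7) can be spot-checked on random inputs. This is only a numerical sanity check in floating point (hence the small tolerance `EPS`, an arbitrary choice here), not a proof; the function name `check_norm_properties` is illustrative.

```python
import random

EPS = 1e-9  # tolerance for floating-point rounding


def check_norm_properties(trials: int, seed: int = 0) -> int:
    """Test (3), (4), (5) and (7) on random complex numbers; return the number of trials passed."""
    rng = random.Random(seed)
    passed = 0
    for _ in range(trials):
        z = complex(rng.uniform(-10, 10), rng.uniform(-10, 10))
        w = complex(rng.uniform(-10, 10), rng.uniform(-10, 10))
        assert abs(z + w) <= abs(z) + abs(w) + EPS            # triangle inequality (3)
        assert abs(abs(z * w) - abs(z) * abs(w)) <= EPS       # multiplicativity (4)
        assert abs(z.conjugate()) == abs(z)                   # conjugation (5)
        assert max(abs(z.real), abs(z.imag)) <= abs(z) + EPS  # left half of (7)
        assert abs(z) <= abs(z.real) + abs(z.imag) + EPS      # right half of (7)
        passed += 1
    return passed


print(check_norm_properties(10_000))  # 10000
```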

As with any other normed vector space, the norm defines a metric on the complex numbers via the definition

defines a metric on the complex numbers via the definition

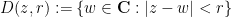

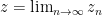

Note that, using the Argand plane representation of $\mathbb{C}$ as $\mathbb{R}^2$, this metric coincides with the usual Euclidean metric on $\mathbb{R}^2$. This metric in turn defines a topology on $\mathbb{C}$ (generated in the usual manner by the open disks $D(z, r) := \{w \in \mathbb{C}: |z - w| < r\}$), which in turn generates all the usual topological notions such as the concept of an open set, closed set, compact set, connected set, and boundary of a set; the notion of a limit of a sequence $z_n$; the notion of a continuous map, and so forth. For instance, a sequence $z_n$ of complex numbers converges to a limit $z \in \mathbb{C}$ if $|z_n - z| \to 0$ as $n \to \infty$, and a map $f: \mathbb{C} \to \mathbb{C}$ is continuous if one has $f(z_n) \to f(z)$ whenever $z_n \to z$, or equivalently if the inverse image of any open set is open. Again, using the Argand plane representation, these notions coincide with their counterparts on the Euclidean plane $\mathbb{R}^2$.

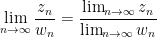

As usual, if a sequence of complex numbers converges to a limit

of complex numbers converges to a limit  , we write

, we write  . From the triangle inequality (3) and the multiplicativity (4) we see that the addition operation

. From the triangle inequality (3) and the multiplicativity (4) we see that the addition operation  , subtraction operation

, subtraction operation  , and multiplication operation

, and multiplication operation  , thus we have the familiar limit laws

, thus we have the familiar limit laws

and

and

whenever the limits on the right-hand side exist. Similarly, from (5) we see that complex conjugation is an isometry of the complex numbers, thus

whenever the limits on the right-hand side exist. Similarly, from (5) we see that complex conjugation is an isometry of the complex numbers, thus

when the limit on the right-hand side exists. As a consequence, the norm form

when the limit on the right-hand side exists. As a consequence, the norm form  and the absolute value

and the absolute value  are also continuous, thus

are also continuous, thus

whenever the limit on the right-hand side exists. Using the formula (2)

whenever the limit on the right-hand side exists. Using the formula (2)

for the reciprocal of a complex number, we also see that division is a

continuous operation as long as the denominator is non-zero, thus

as long as the limits on the right-hand side exist, and the limit in the denominator is non-zero.

as long as the limits on the right-hand side exist, and the limit in the denominator is non-zero.

From (7) we see that

$$z_n \to z \iff \mathrm{Re}(z_n) \to \mathrm{Re}(z) \text{ and } \mathrm{Im}(z_n) \to \mathrm{Im}(z);$$

in particular

$$\lim_{n \to \infty} \mathrm{Re}(z_n) = \mathrm{Re}\Big(\lim_{n \to \infty} z_n\Big)$$

and

$$\lim_{n \to \infty} \mathrm{Im}(z_n) = \mathrm{Im}\Big(\lim_{n \to \infty} z_n\Big)$$

whenever the limit on the right-hand side exists. One consequence of this is that $\mathbb{C}$ is complete: every sequence $z_n$ of complex numbers that is a Cauchy sequence (thus $|z_n - z_m| \to 0$ as $n, m \to \infty$) converges to a unique complex limit $z$, since by (7) the real and imaginary parts of $z_n$ are Cauchy sequences of reals, which converge by the completeness of $\mathbb{R}$. (As such, one can view the complex numbers as a (very small) example of a Hilbert space.)

As with the reals, we have the fundamental fact that any formal series $\sum_{n=0}^\infty z_n$ of complex numbers which is absolutely convergent, in the sense that the non-negative series $\sum_{n=0}^\infty |z_n|$ is finite, is necessarily convergent to some complex number $S$, in the sense that the partial sums $\sum_{n=0}^N z_n$ converge to $S$ as $N \to \infty$. This is because the triangle inequality ensures that the partial sums are a Cauchy sequence, which then converges by the completeness of $\mathbb{C}$. As usual we write $S = \sum_{n=0}^\infty z_n$ to denote the assertion that $S$ is the limit of the partial sums $\sum_{n=0}^N z_n$.
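For example, for $|z| < 1$ the geometric series $\sum_{n=0}^\infty z^n$ is absolutely convergent, since $\sum_{n=0}^\infty |z|^n = 1/(1 - |z|)$ is finite, and its sum is $1/(1 - z)$. The minimal Python sketch below (the function name `geometric_partial_sums` and the choice of $z$ and of 120 terms are illustrative) computes the partial sums and checks that they approach this limit.

```python
def geometric_partial_sums(z: complex, n_terms: int) -> list[complex]:
    """Return the partial sums S_N = z^0 + z^1 + ... + z^N for N = 0, ..., n_terms - 1."""
    sums, total, term = [], 0j, 1 + 0j
    for _ in range(n_terms):
        total += term
        sums.append(total)
        term *= z
    return sums


z = 0.5 + 0.5j          # |z| = 1/sqrt(2) < 1
limit = 1 / (1 - z)     # closed form of the geometric series, equal to 1 + 1j
sums = geometric_partial_sums(z, 120)
print(abs(sums[-1] - limit) < 1e-12)  # True
```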

We will occasionally have need to deal with series that are only

conditionally convergent rather than absolutely convergent, but in most

of our applications the only series we will actually evaluate are the

absolutely convergent ones. Many of the limit laws imply analogues for series, thus for instance

$$\sum_{n=0}^\infty \mathrm{Re}(z_n) = \mathrm{Re} \sum_{n=0}^\infty z_n$$

whenever the series on the right-hand side is absolutely convergent (or even just convergent). We will not write down an exhaustive list of such series laws here.

An important role in complex analysis is played by the unit circle

$$S^1 := \{ z \in \mathbb{C}: |z| = 1 \}.$$

In coordinates, this is the set of points $a + bi$ for which $a^2 + b^2 = 1$, and so this indeed has the geometric structure of a unit circle.

Elements of the unit circle $S^1$ will be referred to in these notes as phases. Every non-zero complex number $z$ has a unique polar decomposition as $z = r\omega$ where $r$ is a positive real and $\omega$ lies on the unit circle $S^1$. Indeed, it is easy to see that this decomposition is given by $r = |z|$ and $\omega = \frac{z}{|z|}$, and that this is the only polar decomposition of $z$ (if $z = r\omega$, then taking absolute values using (4) forces $r = |z|$, and hence $\omega = z/r$). We refer to the polar components $r = |z|$ and $\omega = z/|z|$ of a non-zero complex number $z$ as the magnitude and phase of $z$ respectively.
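The decomposition $r = |z|$, $\omega = z/|z|$ translates directly into code. Here is a minimal Python sketch; the name `polar_decomposition` is illustrative. (Python's standard library also offers `cmath.polar`, which returns the magnitude together with an argument rather than the phase $\omega$ itself.)

```python
def polar_decomposition(z: complex) -> tuple[float, complex]:
    """Return (r, omega) with z = r * omega, r = |z| > 0 and |omega| = 1."""
    if z == 0:
        raise ValueError("0 has no polar decomposition")
    r = abs(z)
    return r, z / r


r, omega = polar_decomposition(-3 + 4j)
print(r, omega)                             # 5.0 (-0.6+0.8j)
print(abs(abs(omega) - 1) < 1e-12)          # True: omega is a phase
print(abs(r * omega - (-3 + 4j)) < 1e-12)   # True: z = r * omega
```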

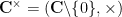

From (4) we see that the unit circle is a multiplicative group; it contains the multiplicative identity

is a multiplicative group; it contains the multiplicative identity  , and if

, and if  lie in

lie in  , then so do

, then so do  and

and  . From (2) we see that reciprocation and complex conjugation agree on the unit circle, thus

. From (2) we see that reciprocation and complex conjugation agree on the unit circle, thus

for

for  . It is worth emphasising that this useful identity does not hold as soon as one leaves the unit circle, in which case one must use the more general formula (2) instead! If

. It is worth emphasising that this useful identity does not hold as soon as one leaves the unit circle, in which case one must use the more general formula (2) instead! If  are non-zero complex numbers

are non-zero complex numbers  with polar decompositions

with polar decompositions  and

and  respectively, then clearly the polar decompositions of

respectively, then clearly the polar decompositions of  and

and  are given by

are given by  and

and

respectively. Thus polar coordinates are very convenient for performing

complex multiplication, although they turn out to be atrocious for

performing complex addition. (This can be contrasted with the usual

Cartesian coordinates ,

,

which are very convenient for performing complex addition and mildly

inconvenient for performing complex multiplication.) In the language of

group theory, the polar decomposition splits the multiplicative complex

group as the direct product of the positive reals

as the direct product of the positive reals  and the unit circle

and the unit circle  :

:  .

.
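A quick numerical illustration of the last two points, namely that $1/z = \overline{z}$ holds on $S^1$ but fails off it, and that polar components multiply separately. This minimal Python sketch builds phases as $\cos t + i\sin t$; the helper `unit_phase` and the sample values are arbitrary choices.

```python
import math


def unit_phase(t: float) -> complex:
    """The point cos t + i sin t on the unit circle S^1."""
    return complex(math.cos(t), math.sin(t))


omega = unit_phase(0.7)
z = 2 * omega  # same phase, magnitude 2

# On S^1, reciprocation agrees with conjugation ...
print(abs(1 / omega - omega.conjugate()) < 1e-12)  # True
# ... but off the unit circle it does not: 1/z = conj(omega)/2 while conj(z) = 2 conj(omega).
print(abs(1 / z - z.conjugate()))  # approximately 1.5

# Polar components multiply separately: (r1 w1)(r2 w2) = (r1 r2)(w1 w2).
r1, w1, r2, w2 = 3.0, unit_phase(0.4), 0.5, unit_phase(-1.1)
print(abs((r1 * w1) * (r2 * w2) - (r1 * r2) * (w1 * w2)) < 1e-12)  # True
```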

If is an element of the unit circle

is an element of the unit circle  , then from (4) we see that the operation

, then from (4) we see that the operation  of multiplication by

of multiplication by  is an isometry of

is an isometry of  , in the sense that

, in the sense that

for all complex numbers

for all complex numbers  . This isometry also preserves the origin

. This isometry also preserves the origin  . As such, it is geometrically obvious (see Exercise 11 below) that the map

. As such, it is geometrically obvious (see Exercise 11 below) that the map

must either be a rotation around the origin, or a reflection around a

line. The former operation is orientation preserving, and the latter is

orientation reversing. Since the map is clearly orientation preserving when

is clearly orientation preserving when  , and the unit circle

, and the unit circle  is connected, a continuity argument shows that

is connected, a continuity argument shows that  must be orientation preserving for all

must be orientation preserving for all  , and so must be a rotation around the origin by some angle. Of course, by trigonometry, we may write

, and so must be a rotation around the origin by some angle. Of course, by trigonometry, we may write

for some real number

for some real number  . The rotation

. The rotation  clearly maps the number

clearly maps the number  to the number

to the number  , and so the rotation must be a counter-clockwise rotation by

, and so the rotation must be a counter-clockwise rotation by  (adopting the usual convention of placing

(adopting the usual convention of placing  to the right of the origin and

to the right of the origin and  above it). In particular, when applying this rotation

above it). In particular, when applying this rotation  to another point

to another point  on the unit circle, this point must get rotated to

on the unit circle, this point must get rotated to  . We have thus given a geometric proof of the multiplication formula

. We have thus given a geometric proof of the multiplication formula

taking real and imaginary parts, we recover the familiar trigonometric addition formulae

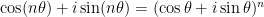
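Formula (8) and the addition formulae can be spot-checked in floating point. A minimal Python sketch (the helper names `rotate` and `addition_formulae_hold` and the sample angles are illustrative; a numerical check of course proves nothing, it merely guards against sign errors):

```python
import math


def rotate(theta: float, z: complex) -> complex:
    """Multiply z by the phase cos(theta) + i sin(theta)."""
    return complex(math.cos(theta), math.sin(theta)) * z


def addition_formulae_hold(theta: float, phi: float, tol: float = 1e-12) -> bool:
    """Compare both sides of the cosine and sine addition formulae."""
    c = math.cos(theta) * math.cos(phi) - math.sin(theta) * math.sin(phi)
    s = math.sin(theta) * math.cos(phi) + math.cos(theta) * math.sin(phi)
    return abs(c - math.cos(theta + phi)) < tol and abs(s - math.sin(theta + phi)) < tol


theta, phi = 0.9, -2.3
lhs = rotate(theta, complex(math.cos(phi), math.sin(phi)))   # left-hand side of (8)
rhs = complex(math.cos(theta + phi), math.sin(theta + phi))  # right-hand side of (8)
print(abs(lhs - rhs) < 1e-12)                    # True
print(addition_formulae_hold(theta, phi))        # True
# Multiplication by i = cos(pi/2) + i sin(pi/2) turns 1 into i: a quarter turn counterclockwise.
print(abs(rotate(math.pi / 2, 1) - 1j) < 1e-12)  # True
```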

We can also iterate the multiplication formula to give de Moivre’s formula

We can also iterate the multiplication formula to give de Moivre’s formula

for any natural number

for any natural number  (or indeed for any integer

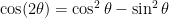

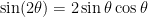

(or indeed for any integer  ), which can in turn be used to recover familiar identities such as the double angle formulae

), which can in turn be used to recover familiar identities such as the double angle formulae

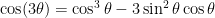

or triple angle formulae

or triple angle formulae

after expanding out de Moivre’s formula for

after expanding out de Moivre’s formula for  or

or  and taking real and imaginary parts.

and taking real and imaginary parts.
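The expansion step can be mechanised. Writing $c = \cos\theta$ and $s = \sin\theta$, the binomial theorem gives $(c + is)^n = \sum_{k=0}^n \binom{n}{k} c^{n-k} (is)^k$, and since $i^k$ cycles through $1, i, -1, -i$, the even-$k$ terms are real and the odd-$k$ terms are imaginary. The Python sketch below (the function name `multiple_angle` and the sample angle are illustrative) sums these terms and compares them with $\cos(n\theta)$ and $\sin(n\theta)$.

```python
import math


def multiple_angle(n: int, theta: float) -> tuple[float, float]:
    """Real and imaginary parts of (cos t + i sin t)^n, expanded by the binomial theorem.

    The k-th term is C(n, k) c^(n-k) (i s)^k, where i^k = (-1)^(k // 2) for even k
    and i^k = i * (-1)^(k // 2) for odd k.
    """
    c, s = math.cos(theta), math.sin(theta)
    re = sum(math.comb(n, k) * (-1) ** (k // 2) * c ** (n - k) * s ** k for k in range(0, n + 1, 2))
    im = sum(math.comb(n, k) * (-1) ** (k // 2) * c ** (n - k) * s ** k for k in range(1, n + 1, 2))
    return re, im


theta = 0.37
for n in (2, 3, 7):
    re, im = multiple_angle(n, theta)
    print(n, math.isclose(re, math.cos(n * theta)), math.isclose(im, math.sin(n * theta)))
```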

Every non-zero complex number $z$ can now be written in polar form as

$$z = r(\cos\theta + i\sin\theta) \tag{9}$$

with $r > 0$ and $\theta \in \mathbb{R}$; we refer to $\theta$ as an argument of $z$. It can be interpreted as an angle of counterclockwise rotation needed to rotate the positive real axis to a position that contains $z$. The argument is not quite unique, due to the periodicity of sine and cosine: if $\theta$ is an argument of $z$, then so is $\theta + 2\pi k$ for any integer $k$, and conversely these are all the possible arguments that $z$ can have. The set of all such arguments will be denoted $\arg(z)$; it is a coset of the discrete group $2\pi\mathbb{Z} := \{2\pi k: k \in \mathbb{Z}\}$, and can thus be viewed as an element of the $1$-torus $\mathbb{R}/2\pi\mathbb{Z}$.

The operation $z \mapsto wz$ of multiplying a complex number $z$ by a given non-zero complex number $w$ now has a very appealing geometric interpretation when expressing $w$ in polar coordinates (9): writing $w = r(\cos\theta + i\sin\theta)$, this operation is the composition of the operation of dilation by $r$ around the origin, and counterclockwise rotation by $\theta$ around the origin. For instance, multiplication by $i$ performs a counter-clockwise rotation by $\pi/2$ around the origin, while multiplication by $-i$ performs instead a clockwise rotation by $\pi/2$. As complex multiplication is commutative and associative, it does not matter in which order one performs the dilation and rotation operations. Similarly, using Cartesian coordinates, we see that the operation $z \mapsto z + w$ of adding a given complex number $w$ to a complex number $z$ is simply a spatial translation by a displacement of $w$. The multiplication operation need not be isometric (due to the presence of the dilation $r$), but observe that both the addition and multiplication operations are conformal

(angle-preserving) and also orientation-preserving (a counterclockwise

loop will transform to another counterclockwise loop, and similarly for

clockwise loops). As we shall see later, these conformal and

orientation-preserving properties of the addition and multiplication

maps will extend to the larger class of complex differentiable maps (at least outside of critical points of the map), and are an important aspect of the geometry of such maps.
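The geometric description of $z \mapsto wz$ can be compared against ordinary complex multiplication. In this minimal Python sketch (function names illustrative), `rotate_then_dilate` rotates the coordinates $(a, b)$ of $z$ by the standard argument $\theta$ of $w$ (computed with `cmath.phase`) using the usual $2 \times 2$ rotation matrix, then scales by $r = |w|$. The sketch also checks that the angle from $u$ to $v$, measured here as the argument of $v/u$, is unchanged when both are multiplied by $w$.

```python
import cmath
import math


def rotate_then_dilate(w: complex, z: complex) -> complex:
    """Rotate z counterclockwise by the argument of w, then dilate by |w|."""
    r, theta = abs(w), cmath.phase(w)
    a, b = z.real, z.imag
    rotated = complex(math.cos(theta) * a - math.sin(theta) * b,
                      math.sin(theta) * a + math.cos(theta) * b)
    return r * rotated


def angle_between(u: complex, v: complex) -> float:
    """Signed angle from u to v (both non-zero), taken as the standard argument of v / u."""
    return cmath.phase(v / u)


w, z = 2 - 1j, 0.3 + 1.7j
print(abs(rotate_then_dilate(w, z) - w * z) < 1e-12)  # True

u, v = 1 + 1j, -0.5 + 2j
print(math.isclose(angle_between(w * u, w * v), angle_between(u, v)))  # True
```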

of a non-zero complex number

of a non-zero complex number  to lie in a fundamental domain of the

to lie in a fundamental domain of the  -torus

-torus  , such as the half-open interval

, such as the half-open interval  or

or  , in order to recover a unique parameterisation (at the cost of creating a branch cut at one point of the unit circle). Traditionally, the fundamental domain that is most often used is the half-open interval

, in order to recover a unique parameterisation (at the cost of creating a branch cut at one point of the unit circle). Traditionally, the fundamental domain that is most often used is the half-open interval  . The unique argument of

. The unique argument of  that lies in this interval is called the standard argument of

that lies in this interval is called the standard argument of  and is denoted

and is denoted  , and

, and  is called the standard branch of the argument function. Thus for instance

is called the standard branch of the argument function. Thus for instance  ,

,  ,

,  , and

, and  . Observe that the standard branch of the argument has a discontinuity on the negative real axis

. Observe that the standard branch of the argument has a discontinuity on the negative real axis  , which is the branch cut

, which is the branch cut

of this branch. Changing the fundamental domain used to define a branch

of the argument can move the branch cut around, but cannot eliminate it

completely, due to non-trivial monodromy

(if one continuously loops once counterclockwise around the origin, and

varies the argument continuously as one does so, the argument will

increment by , and so no branch of the argument function can be continuous at every point on the loop).

, and so no branch of the argument function can be continuous at every point on the loop).

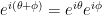
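Python's `cmath.phase` computes a standard argument: it returns a value in $[-\pi, \pi]$, agreeing with $\mathrm{Arg}$ except that a negative real number with a negative-zero imaginary part (such as `complex(-1, -0.0)`) is sent to $-\pi$ rather than $\pi$. The minimal sketch below (helper name illustrative) shows the jump of about $2\pi$ across the branch cut, and then illustrates the monodromy by following the argument continuously once around the unit circle: each small step in `cmath.phase` is shifted by a multiple of $2\pi$ into $[-\pi, \pi)$ before being accumulated, so the total should come out close to $2\pi$ rather than $0$.

```python
import cmath
import math

# Standard argument of 1, i, -1, -i.
print([cmath.phase(z) for z in (1, 1j, -1, -1j)])  # approximately [0, pi/2, pi, -pi/2]

# The branch cut: Arg jumps by about 2*pi across the negative real axis.
jump = cmath.phase(complex(-1, 1e-9)) - cmath.phase(complex(-1, -1e-9))
print(math.isclose(jump, 2 * math.pi))  # True


def argument_change_around_circle(n_steps: int = 1000) -> float:
    """Follow a continuous choice of argument once counterclockwise around S^1
    and return the total change (the monodromy)."""
    total = 0.0
    prev = cmath.phase(1)
    for k in range(1, n_steps + 1):
        t = 2 * math.pi * k / n_steps
        cur = cmath.phase(complex(math.cos(t), math.sin(t)))
        # Choose the representative of (cur - prev) modulo 2*pi lying in [-pi, pi).
        step = (cur - prev + math.pi) % (2 * math.pi) - math.pi
        total += step
        prev = cur
    return total


print(math.isclose(argument_change_around_circle(), 2 * math.pi))  # True
```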

The multiplication formula (8) resembles the multiplication formula

$$e^{x+y} = e^x e^y \tag{10}$$

for the real exponential function $\exp: \mathbb{R} \to \mathbb{R}$. The two formulae can be unified through the famous Euler formula involving the complex exponential $\exp: \mathbb{C} \to \mathbb{C}$. There are many ways to define the complex exponential. Perhaps the most natural is through the ordinary differential equation $\frac{d}{dz}\exp(z) = \exp(z)$ with boundary condition $\exp(0) = 1$.

However, as we have not yet set up a theory of complex differentiation,

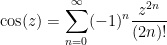

we will proceed (at least temporarily) through the device of Taylor series. Recalling that the real exponential function has the Taylor expansion

has the Taylor expansion

which is absolutely convergent for any real

which is absolutely convergent for any real  , one is led to define the complex exponential function

, one is led to define the complex exponential function  by the analogous expansion

by the analogous expansion

noting from (4) that the absolute convergence of the real exponential

noting from (4) that the absolute convergence of the real exponential  for any

for any  implies the absolute convergence of the complex exponential for any

implies the absolute convergence of the complex exponential for any  . We also frequently write

. We also frequently write  for

for  . The multiplication formula (10) for the real exponential extends to the complex exponential:

. The multiplication formula (10) for the real exponential extends to the complex exponential:
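Definition (11) can be implemented directly by summing the series. The following minimal Python sketch compares the partial sums with the library function `cmath.exp` and spot-checks the multiplication formula (12); the name `exp_series` and the cut-off of 80 terms are arbitrary choices, adequate only for moderate $|z|$. Each term is obtained from the previous one by multiplying by $z/(n+1)$, which avoids computing large factorials.

```python
import cmath


def exp_series(z: complex, n_terms: int = 80) -> complex:
    """Partial sum of the series (11): sum over n < n_terms of z^n / n!."""
    total, term = 0j, 1 + 0j
    for n in range(n_terms):
        total += term
        term *= z / (n + 1)  # turns z^n / n! into z^(n+1) / (n+1)!
    return total


z, w = 1.5 - 2j, -0.7 + 3.1j
print(cmath.isclose(exp_series(z), cmath.exp(z), rel_tol=1e-12))                       # True
print(cmath.isclose(exp_series(z + w), exp_series(z) * exp_series(w), rel_tol=1e-12))  # True
```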

Comparing the expansion (11) at $z = i\theta$ (for real $\theta$) with the familiar Taylor expansions

$$\sin(x) = x - \frac{x^3}{3!} + \frac{x^5}{5!} - \dots$$

and

$$\cos(x) = 1 - \frac{x^2}{2!} + \frac{x^4}{4!} - \dots$$

for the (real) sine and cosine functions, one obtains the Euler formula

$$e^{i\theta} = \cos\theta + i\sin\theta \tag{13}$$

for any real number $\theta$; in particular we have the famous identities

$$e^{2\pi i} = 1 \tag{14}$$

and

$$e^{\pi i} + 1 = 0. \tag{15}$$
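The Euler formula (13) and the identities $e^{2\pi i} = 1$ and $e^{\pi i} + 1 = 0$ can be spot-checked with `cmath.exp`. In floating point these identities hold only up to rounding, because `math.pi` is not exactly $\pi$; the helper name `euler_formula_error` and the sample angles in this minimal Python sketch are arbitrary.

```python
import cmath
import math


def euler_formula_error(theta: float) -> float:
    """|exp(i*theta) - (cos(theta) + i sin(theta))|, which should be at rounding level."""
    return abs(cmath.exp(1j * theta) - complex(math.cos(theta), math.sin(theta)))


print(max(euler_formula_error(t) for t in (0.0, 0.3, 1.0, math.pi / 2, 2.5, -4.0)) < 1e-12)  # True
print(abs(cmath.exp(2j * math.pi) - 1))  # tiny but non-zero: math.pi only approximates pi
print(abs(cmath.exp(1j * math.pi) + 1))  # likewise
```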

We now see that the multiplication formula (8) can be written as a special form

$$e^{i\theta} e^{i\phi} = e^{i(\theta + \phi)}$$

of (12); similarly, de Moivre’s formula takes the simple and intuitive form

$$e^{in\theta} = \left(e^{i\theta}\right)^n.$$

From (12) and (13) we also see that the exponential function basically transforms Cartesian coordinates to polar coordinates:

From (12) and (13) we also see that the exponential function basically transforms Cartesian coordinates to polar coordinates:

Later on in the course we will study (the various branches of) the

Later on in the course we will study (the various branches of) the

logarithm function that inverts the complex exponential, thus converting

polar coordinates back to Cartesian ones.

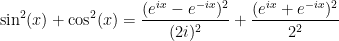

From (13) and (1), together with the easily verified identity

$$\overline{e^{i\theta}} = e^{-i\theta},$$

we see that we can recover the trigonometric functions $\sin, \cos: \mathbb{R} \to \mathbb{R}$ from the complex exponential by the formulae

$$\sin\theta = \frac{e^{i\theta} - e^{-i\theta}}{2i}, \qquad \cos\theta = \frac{e^{i\theta} + e^{-i\theta}}{2}. \tag{16}$$

(Indeed, if one wished, one could take these identities as the definition

of the sine and cosine functions, giving a purely analytic way to

construct these trigonometric functions.) From these identities one can

derive all the usual trigonometric identities from the basic properties

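of the exponential (and in particular (12)). For instance, using a little bit of high school algebra we can prove the familiar identity

$$\sin^2\theta + \cos^2\theta = 1$$

from (16):

$$\sin^2\theta + \cos^2\theta = \frac{e^{2i\theta} - 2 + e^{-2i\theta}}{-4} + \frac{e^{2i\theta} + 2 + e^{-2i\theta}}{4} = \frac{4}{4} = 1,$$

where we used (12) to write $e^{i\theta} e^{-i\theta} = e^0 = 1$.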

Thus, in principle at least, one no longer has a need to memorize

Thus, in principle at least, one no longer has a need to memorize

all the different trigonometric identities out there, since they can now

all be unified as consequences of just a handful of basic identities

for the complex exponential, such as (12), (14), and (15).

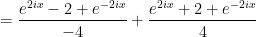

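In view of (16), it is now natural to introduce the complex sine and cosine functions $\sin: \mathbb{C} \to \mathbb{C}$ and $\cos: \mathbb{C} \to \mathbb{C}$ by the formula

$$\sin z := \frac{e^{iz} - e^{-iz}}{2i}, \qquad \cos z := \frac{e^{iz} + e^{-iz}}{2}. \tag{17}$$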

These complex trigonometric functions no longer

have a direct trigonometric interpretation (as one cannot easily

develop a theory of complex angles), but they still inherit almost all

of the algebraic properties of their real-variable counterparts. For

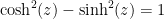

instance, one can repeat the above high school algebra computations verbatim to conclude that

for all . (We caution however that this does not imply that

. (We caution however that this does not imply that  and

and  are bounded in magnitude by

are bounded in magnitude by  – note carefully the lack of absolute value signs outside of

– note carefully the lack of absolute value signs outside of  and

and  in the above formula! See also Exercise 16

in the above formula! See also Exercise 16

below.) Similarly for all of the other trigonometric identities. (Later

on in this series of lecture notes, we will develop the concept of analytic continuation, which can explain why so many real-variable algebraic identities naturally extend to their complex counterparts.) From (11)

we see that the complex sine and cosine functions have the same Taylor

series expansion as their real-variable counterparts, namely

and

and

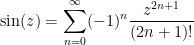

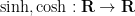
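The caution about boundedness is easy to see numerically: at $z = 2i$, formula (17) gives $\sin(2i) = i\sinh 2$ and $\cos(2i) = \cosh 2$, both of magnitude greater than $1$, yet (18) still holds. This minimal Python sketch uses the standard-library `cmath.sin` and `cmath.cos`, and also compares a truncated Taylor series for $\sin z$ against `cmath.sin`; the helper `sin_series` and its 40-term cut-off are illustrative choices.

```python
import cmath
import math


def sin_series(z: complex, n_terms: int = 40) -> complex:
    """Truncated Taylor series z - z^3/3! + z^5/5! - ... with n_terms terms."""
    total, term = 0j, complex(z)
    for k in range(n_terms):
        total += term
        term *= -z * z / ((2 * k + 2) * (2 * k + 3))  # z^(2k+1)/(2k+1)! -> next term
    return total


z = 2j
s, c = cmath.sin(z), cmath.cos(z)
print(abs(s), abs(c))  # sinh(2) and cosh(2), both larger than 1
print(cmath.isclose(s * s + c * c, 1, rel_tol=1e-12))  # True: identity (18)
print(cmath.isclose(sin_series(1 + 2j), cmath.sin(1 + 2j), rel_tol=1e-12))  # True
```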

The formulae (17) for the complex sine and cosine functions greatly resemble those of the hyperbolic trigonometric functions $\sinh, \cosh: \mathbb{R} \to \mathbb{R}$, defined by the formulae

$$\sinh x := \frac{e^x - e^{-x}}{2}, \qquad \cosh x := \frac{e^x + e^{-x}}{2}.$$

Indeed, if we extend these functions to the complex domain by defining $\sinh, \cosh: \mathbb{C} \to \mathbb{C}$ to be the functions

$$\sinh z := \frac{e^z - e^{-z}}{2}, \qquad \cosh z := \frac{e^z + e^{-z}}{2},$$

then on comparison with (17) we obtain the complex identities

$$\sinh z = -i\sin(iz), \qquad \cosh z = \cos(iz) \tag{19}$$

or equivalently

$$\sin z = -i\sinh(iz), \qquad \cos z = \cosh(iz) \tag{20}$$

for all complex $z$.

Thus we see that once we adopt the perspective of working over the

complex numbers, the hyperbolic trigonometric functions are “rotations

by 90 degrees” of the ordinary trigonometric functions; this is a simple

example of what physicists call a Wick rotation.

In particular, we see from these identities that any trigonometric

identity will have a hyperbolic counterpart, though due to the presence

of various factors of $i$, the signs may change as one passes from trigonometric to hyperbolic functions or vice versa (a fact quantified by Osborne’s rule). For instance, by substituting (19) or (20) into (18) (and replacing $z$ by $iz$ or $-iz$ as appropriate), we end up with the analogous identity

$$\cosh^2 z - \sinh^2 z = 1$$

for the hyperbolic trigonometric functions. Similarly for all other

trigonometric identities. Thus we see that the complex exponential

single-handedly unites the trigonometry, hyperbolic trigonometry, and

the real exponential function into a single coherent theory!
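Identities (19), (20) and the hyperbolic identity $\cosh^2 z - \sinh^2 z = 1$ can likewise be spot-checked at a few complex points using the standard-library functions `cmath.sin`, `cmath.cos`, `cmath.sinh` and `cmath.cosh`. The sample points, the tolerance and the helper name `check_wick_rotation` in this minimal Python sketch are arbitrary choices.

```python
import cmath

TOL = dict(rel_tol=1e-12, abs_tol=1e-12)
SAMPLES = (0.5, 1 + 2j, -0.3 + 0.7j, 3j)


def check_wick_rotation(points=SAMPLES) -> bool:
    """Assert (19), (20) and cosh^2 - sinh^2 = 1 at each sample point."""
    for z in points:
        assert cmath.isclose(cmath.sinh(z), -1j * cmath.sin(1j * z), **TOL)  # (19)
        assert cmath.isclose(cmath.cosh(z), cmath.cos(1j * z), **TOL)        # (19)
        assert cmath.isclose(cmath.sin(z), -1j * cmath.sinh(1j * z), **TOL)  # (20)
        assert cmath.isclose(cmath.cos(z), cmath.cosh(1j * z), **TOL)        # (20)
        assert cmath.isclose(cmath.cosh(z) ** 2 - cmath.sinh(z) ** 2, 1, **TOL)
    return True


print(check_wick_rotation())  # True
```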


246A, Notes 0: the complex numbers

18 September, 2016 in 246A - complex analysis, math.CV, math.RA | Tags: complex numbers, exponentiation, trigonometry

numbers; all else is the work of man”. The truth of this statement

(literal or otherwise) is debatable; but one can certainly view the

other standard number systems

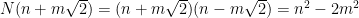

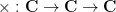

- The integers $\mathbb{Z}$ are the additive completion of the natural numbers $\mathbb{N}$ (the minimal additive group that contains a copy of $\mathbb{N}$).
- The rationals $\mathbb{Q}$ are the multiplicative completion of the integers $\mathbb{Z}$ (the minimal field that contains a copy of $\mathbb{Z}$).
- The reals $\mathbb{R}$ are the metric completion of the rationals $\mathbb{Q}$ (the minimal complete metric space that contains a copy of $\mathbb{Q}$).
- The complex numbers $\mathbb{C}$ are the algebraic completion of the reals $\mathbb{R}$ (the minimal algebraically closed field that contains a copy of $\mathbb{R}$).

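Strictly speaking, one cannot define the reals $\mathbb{R}$ from scratch as the metric completion of the rationals $\mathbb{Q}$, because the definition of a metric space itself requires the notion of the reals! (One can of course construct $\mathbb{R}$ by other means, for instance by using Dedekind cuts or by using uniform spaces in place of metric spaces.) The definition of the complex numbers as the algebraic completion of the reals does not suffer from such a circularity issue, but a certain amount of field theory is required to work with this definition initially. For the purposes of quickly constructing the complex numbers, it is thus more traditional to first define $\mathbb{C}$ as a quadratic extension of the reals $\mathbb{R}$, and more precisely as the extension $\mathbb{C} = \mathbb{R}(i)$ formed by adjoining a square root $i$ of $-1$ to the reals, that is to say a solution to the equation $i^2 + 1 = 0$. It is not immediately obvious that this extension is in fact algebraically closed; this is the content of the famous fundamental theorem of algebra, which we will prove later in this course.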

The two equivalent definitions of $\mathbb{C}$ (as the algebraic closure, and as a quadratic extension, of the reals respectively) each reveal important features of the complex numbers in applications. Because $\mathbb{C}$ is algebraically closed, all polynomials over the complex numbers split

completely, which leads to a good spectral theory for both

finite-dimensional matrices and infinite-dimensional operators; in

particular, one expects to be able to diagonalise most matrices and

operators. Applying this theory to constant coefficient ordinary

differential equations leads to a unified theory of such solutions, in

which real-variable ODE behaviour such as exponential growth or decay,

polynomial growth, and sinusoidal oscillation all become aspects of a

single object, the complex exponential $t \mapsto e^{\alpha t}$ (or more generally, the matrix exponential $t \mapsto \exp(At)$).

Applying this theory more generally to diagonalise arbitrary

translation-invariant operators over some locally compact abelian group,

one arrives at Fourier analysis,

which is thus most naturally phrased in terms of complex-valued

functions rather than real-valued ones. If one drops the assumption that

the underlying group is abelian, one instead discovers the

representation theory of unitary representations,

which is simpler to study than the real-valued counterpart of

orthogonal representations. For closely related reasons, the theory of

complex Lie groups is simpler than that of real Lie groups.

Meanwhile, the fact that the complex numbers are a quadratic extension

of the reals lets one view the complex numbers geometrically as a

two-dimensional plane over the reals (the Argand plane).

Whereas a point singularity in the real line disconnects that line, a

point singularity in the Argand plane leaves the rest of the plane

connected (although, importantly, the punctured plane is no longer simply connected).

As we shall see, this fact causes singularities in complex analytic

functions to be better behaved than singularities of real analytic

functions, ultimately leading to the powerful residue calculus

for computing complex integrals. Remarkably, this calculus, when

combined with the quintessentially complex-variable technique of contour shifting, can also be used to compute some (though certainly not all) definite integrals of real-valued

functions that would be much more difficult to compute by purely

real-variable methods; this is a prime example of Hadamard’s famous

dictum that “the shortest path between two truths in the real domain

passes through the complex domain”.

Another important geometric feature of the Argand plane is the angle

between two tangent vectors to a point in the plane. As it turns out,

the operation of multiplication by a complex scalar preserves the

magnitude and orientation of such angles; the same fact is true for any

non-degenerate complex analytic mapping, as can be seen by performing a

Taylor expansion to first order. This fact ties the study of complex

mappings closely to that of the conformal geometry

of the plane (and more generally, of two-dimensional surfaces and

domains). In particular, one can use complex analytic maps to

conformally transform one two-dimensional domain to another, leading

among other things to the famous Riemann mapping theorem, and to the classification of Riemann surfaces.

If one Taylor expands complex analytic maps to second order rather than

first order, one discovers a further important property of these maps,

namely that they are harmonic.

This fact makes the class of complex analytic maps extremely rigid and

well behaved analytically; indeed, the entire theory of elliptic PDE now

comes into play, giving useful properties such as elliptic regularity and the maximum principle.

In fact, due to the magic of residue calculus and contour shifting, we

already obtain these properties for maps that are merely complex

differentiable rather than complex analytic, which leads to the striking

fact that complex differentiable functions are automatically analytic

(in contrast to the real-variable case, in which real differentiable

functions can be very far from being analytic).

The geometric structure of the complex numbers (and more generally of complex manifolds and complex varieties), when combined with the algebraic closure of the complex numbers, leads to the beautiful subject of complex algebraic geometry, which motivates the much more general theory developed in modern algebraic geometry. However, we will not develop the algebraic geometry aspects of complex analysis here.

Last, but not least, because of the good behaviour of Taylor series in the complex plane, complex analysis is an excellent setting in which to manipulate various generating functions, particularly Fourier series $\sum_n a_n e^{2\pi i n \theta}$ (which can be viewed as boundary values of power (or Laurent) series $\sum_n a_n z^n$), as well as Dirichlet series $\sum_n \frac{a_n}{n^s}$. The theory of contour integration provides a very useful dictionary between the asymptotic behaviour of the coefficients $a_n$ and the complex analytic behaviour of the Dirichlet or Fourier series, particularly with regard to its poles and other singularities. This turns out to be a particularly handy dictionary in analytic number theory, for instance relating the distribution of the primes to the Riemann zeta function. Nowadays, many of the analytic number theory results first obtained through complex analysis (such as the prime number theorem) can also be obtained by more “real-variable” methods; however, the complex-analytic viewpoint is still extremely valuable and illuminating.

We will frequently touch upon many of these connections to other fields of mathematics in these lecture notes. However, these are mostly side remarks intended to provide context, and it is certainly possible to skip most of these tangents and focus purely on the complex analysis material in these notes if desired.

Note: complex analysis is a very visual subject, and one should draw plenty of pictures while learning it. I am however not planning to put too many pictures in these notes, partly as it is somewhat inconvenient to do so on this blog from a technical perspective, but also because pictures that one draws on one’s own are likely to be far more useful to you than pictures that were supplied by someone else.

— 1. The construction and algebra of the complex numbers —

Note: this section will be far more algebraic in nature than the rest of the course; we are concentrating almost all of the algebraic preliminaries in this section in order to get them out of the way and focus subsequently on the analytic aspects of the complex numbers.

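Thanks to the laws of high-school algebra, we know that the real numbers $\mathbb{R}$ form a field: $\mathbb{R}$ is endowed with the arithmetic operations of addition, subtraction, multiplication, and division, as well as the additive identity $0$ and the multiplicative identity $1$, which obey the usual laws of algebra (that is, the field axioms).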

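The algebraic structure of the reals does have one drawback, though: not all (non-trivial) polynomials have roots! Most famously, the polynomial equation $x^2+1=0$ has no solutions over the reals, because $x^2$ is always non-negative, and hence $x^2+1$ is always strictly positive, whenever $x$ is a real number.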

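As mentioned in the introduction, one traditional way to define the complex numbers $\mathbb{C}$ is as the smallest possible extension of the reals $\mathbb{R}$ that fixes this one specific problem: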

Definition 1 (The complex numbers) A field of complex numbers is a field $\mathbb{C}$ that contains the real numbers $\mathbb{R}$ as a subfield, as well as a root $i$ of the equation $i^2+1=0$. (Thus, strictly speaking, a field of complex numbers is a pair $(\mathbb{C}, i)$, but we will almost always abuse notation and use $\mathbb{C}$ as a metonym for the pair $(\mathbb{C}, i)$.) Furthermore, $\mathbb{C}$ is generated by $\mathbb{R}$ and $i$, in the sense that there is no subfield of $\mathbb{C}$, other than $\mathbb{C}$ itself, that contains both $\mathbb{R}$ and $i$; thus, in the language of field extensions, we have $\mathbb{C} = \mathbb{R}(i)$.

(We will take the existence of the real numbers $\mathbb{R}$ as a given in this course; constructions of the real number system can of course be found in many real analysis texts, including my own.)

Definition 1 is short, but proposing it as a definition of the complex numbers raises some immediate questions:

- (Existence) Does such a field $\mathbb{C}$ even exist?
- (Uniqueness) Is such a field $\mathbb{C}$ unique (up to isomorphism)?
- (Non-arbitrariness) Why the square root of $-1$? Why not adjoin instead, say, a fourth root of some other number, or the solution to some other algebraic equation? Also, could one iterate the process, extending $\mathbb{C}$ further by adding more roots of equations?

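We begin with existence. One can construct the complex numbers quite explicitly and quickly using the Argand plane construction; see Remark 7 below. However, from the perspective of higher mathematics, it is more natural to view the construction of the complex numbers as a special case of the more general algebraic construction that can extend any field $k$ by a root $\alpha$ of an irreducible polynomial $P \in k[\mathrm{x}]$ of degree at least two (see Exercise 5 below); specialising to $k = \mathbb{R}$ and $P = \mathrm{x}^2+1$ produces a field of complex numbers. We describe the construction in that special case.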

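Starting with the real numbers $\mathbb{R}$, we form the space $\mathbb{R}[\mathrm{x}]$ of formal polynomials $P(\mathrm{x}) = a_d \mathrm{x}^d + \dots + a_1 \mathrm{x} + a_0$ in one indeterminate $\mathrm{x}$, with real coefficients $a_0, \dots, a_d$. (A small technical point: we treat these polynomials as formal expressions rather than as functions. Over the reals it turns out to be harmless to identify each polynomial $P$ with the function $P: \mathbb{R} \to \mathbb{R}$ obtained by interpreting the indeterminate $\mathrm{x}$ as a real variable; but if one were to generalise this construction to positive characteristic fields, and particularly finite fields, then one can run into difficulties if polynomials are not treated formally, due to the fact that two distinct formal polynomials might agree on all inputs in a given finite field (e.g. the polynomials $\mathrm{x}$ and $\mathrm{x}^p$ agree at every point of the finite field $\mathbb{F}_p$ of $p$ elements, by Fermat's little theorem).) This space $\mathbb{R}[\mathrm{x}]$ of polynomials has a pretty good algebraic structure; in particular, the usual operations of addition, subtraction, and multiplication on polynomials, together with the zero polynomial $0$ and the unit polynomial $1$, give $\mathbb{R}[\mathrm{x}]$ the structure of a unital commutative ring, which contains $\mathbb{R}$ as a subring (the constant polynomials). However, $\mathbb{R}[\mathrm{x}]$ is not a field: for instance, the polynomial $\mathrm{x}$ has no multiplicative inverse, since $\mathrm{x} Q(\mathrm{x})$ has zero constant term for every polynomial $Q$.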

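If a unital commutative ring fails to be a field, then it will instead possess a number of non-trivial ideals. The only ideal we will need to consider here is the principal ideal $\langle \mathrm{x}^2+1 \rangle := \{ (\mathrm{x}^2+1) Q(\mathrm{x}) : Q \in \mathbb{R}[\mathrm{x}] \}$ of all multiples of $\mathrm{x}^2+1$. This is clearly an ideal: it is closed under addition and subtraction, and the product of any element of the ideal with an arbitrary element of $\mathbb{R}[\mathrm{x}]$ stays in the ideal.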

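We now define $\mathbb{C}$ to be the quotient ring $\mathbb{C} := \mathbb{R}[\mathrm{x}] / \langle \mathrm{x}^2+1 \rangle$, whose elements are the cosets $P(\mathrm{x}) + \langle \mathrm{x}^2+1 \rangle$; the ring operations of $\mathbb{R}[\mathrm{x}]$ descend to these cosets and make $\mathbb{C}$ a unital commutative ring. By the division algorithm, every coset contains exactly one polynomial of degree at most one, so every element of $\mathbb{C}$ can be written uniquely as $a + b\mathrm{x} + \langle \mathrm{x}^2+1 \rangle$ with $a, b$ real. In particular, the map $a \mapsto a + \langle \mathrm{x}^2+1 \rangle$ embeds $\mathbb{R}$ into $\mathbb{C}$ as a subring (no non-zero constant is a multiple of $\mathrm{x}^2+1$).

If we define $i := \mathrm{x} + \langle \mathrm{x}^2+1 \rangle$, then $i^2 + 1 = (\mathrm{x}^2+1) + \langle \mathrm{x}^2+1 \rangle = 0$ in $\mathbb{C}$, so $i$ is a root of the equation $i^2+1=0$. Every element of $\mathbb{C}$ is a polynomial in $i$ with real coefficients, so $\mathbb{C}$ is generated by $\mathbb{R}$ and $i$.

The only remaining thing to verify is that $\mathbb{C}$ is a field, that is to say that every non-zero element of $\mathbb{C}$ has a multiplicative inverse. Let $P(\mathrm{x}) + \langle \mathrm{x}^2+1 \rangle$ be a non-zero element, so that $P$ is not a multiple of $\mathrm{x}^2+1$. Because the polynomial $\mathrm{x}^2+1$ is irreducible over $\mathbb{R}$ (it has no real roots, and hence no linear factors), the only common divisors of $P$ and $\mathrm{x}^2+1$ are the non-zero constants, and so by the Euclidean algorithm there exist polynomials $Q, S \in \mathbb{R}[\mathrm{x}]$ with

$$P(\mathrm{x}) Q(\mathrm{x}) + (\mathrm{x}^2+1) S(\mathrm{x}) = 1.$$

Since $(\mathrm{x}^2+1) S(\mathrm{x})$ lies in the ideal $\langle \mathrm{x}^2+1 \rangle$, the coset $Q(\mathrm{x}) + \langle \mathrm{x}^2+1 \rangle$ is a multiplicative inverse of $P(\mathrm{x}) + \langle \mathrm{x}^2+1 \rangle$, as required.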

Remark 2 One can think of the action of passing from a ring $R$ to a quotient $R/I$ by some ideal $I$ as the action of forcing some relations to hold between the various elements of $R$, by requiring all the elements of the ideal $I$ (or equivalently, all the generators of $I$) to vanish. Thus one can think of $\mathbb{R}[\mathrm{x}]/\langle \mathrm{x}^2+1 \rangle$ as the ring formed by adjoining a new element $i$ to the existing ring $\mathbb{R}$ and then demanding the constraint $i^2+1=0$.

With this perspective, the main issues to check in order to obtain a complex field are, firstly, that these relations do not collapse the ring so much that two previously distinct elements of $\mathbb{R}$ become equal, and secondly, that all the non-zero elements become invertible once the relations are imposed, so that we obtain a field rather than merely a ring or integral domain.
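To make the quotient construction concrete, here is a small Python sketch that computes in $\mathbb{R}[\mathrm{x}]/\langle \mathrm{x}^2+1 \rangle$ by literally reducing polynomials modulo $\mathrm{x}^2+1$, in the spirit of Remark 2: adjoin $\mathrm{x}$, then impose $\mathrm{x}^2 = -1$. It uses exact rational arithmetic (`fractions.Fraction`) in place of real numbers, which is an assumption made so that equality tests are exact, and the class name `Coset` is hypothetical. The inverse comes from the explicit Bezout identity $(a + b\mathrm{x})(a - b\mathrm{x}) + b^2(\mathrm{x}^2+1) = a^2 + b^2$.

```python
from fractions import Fraction


def poly_mul(p, q):
    """Product of polynomials given as coefficient lists [a0, a1, ..., ad]."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def reduce_mod_x2_plus_1(p):
    """Remainder of p on division by x^2 + 1, returned as (a, b) meaning a + b x.

    Each term c x^k with k >= 2 is rewritten using
    c x^k = c x^(k-2) (x^2 + 1) - c x^(k-2), i.e. it is replaced by -c x^(k-2).
    """
    p = [Fraction(c) for c in p] + [Fraction(0), Fraction(0)]  # pad to length >= 2
    for k in range(len(p) - 1, 1, -1):
        p[k - 2] -= p[k]
        p[k] = Fraction(0)
    return p[0], p[1]


class Coset:
    """The coset (a + b x) + <x^2 + 1>, stored via its unique degree <= 1 representative."""

    def __init__(self, a, b=0):
        self.a, self.b = Fraction(a), Fraction(b)

    @classmethod
    def from_poly(cls, p):
        return cls(*reduce_mod_x2_plus_1(p))

    def __add__(self, other):
        return Coset(self.a + other.a, self.b + other.b)

    def __mul__(self, other):
        return Coset.from_poly(poly_mul([self.a, self.b], [other.a, other.b]))

    def __eq__(self, other):
        return (self.a, self.b) == (other.a, other.b)

    def inverse(self):
        # Bezout: (a + b x)(a - b x) + b^2 (x^2 + 1) = a^2 + b^2,
        # so (a - b x) / (a^2 + b^2) is an inverse modulo x^2 + 1.
        n = self.a ** 2 + self.b ** 2
        if n == 0:
            raise ZeroDivisionError("the zero coset has no inverse")
        return Coset(self.a / n, -self.b / n)

    def __repr__(self):
        return f"({self.a}) + ({self.b})x + <x^2+1>"


i = Coset(0, 1)                               # the coset of x
print(i * i)                                  # (-1) + (0)x + <x^2+1>
print(Coset.from_poly([1, 0, 0, 2, 0, 1]))    # x^5 + 2x^3 + 1 reduces to 1 - x
z = Coset(3, 4)
print(z * z.inverse())                        # (1) + (0)x + <x^2+1>
```

Storing only the degree-at-most-one remainder is a design choice that mirrors the uniqueness of the representative $a + b\mathrm{x}$; a more general implementation would reduce modulo an arbitrary polynomial by long division.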

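Remark 3 It is instructive to compare the complex field $\mathbb{R}[\mathrm{x}]/\langle \mathrm{x}^2+1 \rangle$, formed by adjoining the square root of $-1$ to the reals, with other commutative rings such as the dual numbers $\mathbb{R}[\mathrm{x}]/\langle \mathrm{x}^2 \rangle$ (which adjoins an additional square root of $0$ to the reals) or the split complex numbers $\mathbb{R}[\mathrm{x}]/\langle \mathrm{x}^2-1 \rangle$ (which adjoins a new square root of $+1$ to the reals). The latter two objects are perfectly good rings, but are not fields (they contain zero divisors, and the first ring even contains a nilpotent). This is ultimately due to the reducible nature of the polynomials $\mathrm{x}^2 = \mathrm{x} \cdot \mathrm{x}$ and $\mathrm{x}^2-1 = (\mathrm{x}-1)(\mathrm{x}+1)$ in $\mathbb{R}[\mathrm{x}]$.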
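The contrast in Remark 3 can be seen directly by computing in $\mathbb{R}[\mathrm{x}]/\langle \mathrm{x}^2 - c \rangle$ for $c = -1$ (complex numbers), $c = 0$ (dual numbers) and $c = 1$ (split complex numbers), where the single rule $\mathrm{x}^2 = c$ gives $(a + b\mathrm{x})(d + e\mathrm{x}) = (ad + c\,be) + (ae + bd)\mathrm{x}$. In this sketch (function names hypothetical, integer coefficients for exactness), the constant $N(a + b\mathrm{x}) = (a + b\mathrm{x})(a - b\mathrm{x}) = a^2 - c b^2$ decides invertibility; among these three cases it is non-zero for every non-zero element only when $c = -1$.

```python
from fractions import Fraction


def mul(c, p, q):
    """Product of p = a + b x and q = d + e x in R[x]/<x^2 - c>, using x^2 = c."""
    a, b = p
    d, e = q
    return (a * d + c * b * e, a * e + b * d)


def norm(c, p):
    """(a + b x)(a - b x) = a^2 - c b^2, a constant in R[x]/<x^2 - c>."""
    a, b = p
    return a * a - c * b * b


def inverse(c, p):
    """Inverse (a - b x) / N of p = a + b x; it exists exactly when N = norm(c, p) != 0."""
    n = norm(c, p)
    if n == 0:
        raise ZeroDivisionError(f"{p} is not invertible when x^2 = {c}")
    a, b = p
    return (Fraction(a, n), Fraction(-b, n))


x = (0, 1)

print(mul(-1, x, x))              # (-1, 0): x is a square root of -1
print(mul(0, x, x))               # (0, 0): x is a non-zero nilpotent in the dual numbers
print(mul(1, (1, 1), (1, -1)))    # (0, 0): (1 + x)(1 - x) = 0 in the split complex numbers
print(inverse(-1, (3, 4)))        # (Fraction(3, 25), Fraction(-4, 25))
```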

Exercise 4 (Uniqueness up to isomorphism) Suppose that one has two complex fields $(\mathbb{C}, i)$ and $(\mathbb{C}', i')$. Show that there is a unique field isomorphism $\iota: \mathbb{C} \to \mathbb{C}'$ that maps $i$ to $i'$ and is the identity on $\mathbb{R}$.

Now that we have existence and uniqueness up to isomorphism, it is safe to designate one of the complex fields $(\mathbb{C}, i)$ as the complex field; the other complex fields out there will no longer be of much importance in this course (or indeed, in most of mathematics), with one small exception that we will get to later in this section. One can, if one wishes, use the above abstract algebraic construction $\mathbb{R}[\mathrm{x}]/\langle \mathrm{x}^2+1 \rangle$ as this designated complex field, but the precise choice of construction is not terribly relevant for the purposes of actually doing complex analysis, much as the question of whether to construct the real numbers using Dedekind cuts, equivalence classes of Cauchy sequences, or some other construction is not terribly relevant for the purposes of actually doing real analysis. So, from here on out, we will no longer refer to the precise construction of $\mathbb{C}$ used.

Exercise 5 Let $k$ be an arbitrary field, let $k[\mathrm{x}]$ be the ring of polynomials with coefficients in $k$, and let $P(\mathrm{x})$ be an irreducible polynomial in $k[\mathrm{x}]$ of degree at least two. Show that $k[\mathrm{x}]/\langle P(\mathrm{x}) \rangle$ is a field containing an embedded copy of $k$, as well as a root $\alpha$ of the equation $P(\alpha) = 0$, and that this field is generated by $k$ and $\alpha$. Also show that all such fields are unique up to isomorphism. (This field $k(\alpha)$ is an example of a field extension of $k$, the further study of which can be found in any advanced undergraduate or early graduate text on algebra, and is the starting point in particular for the beautiful topic of Galois theory, which we will not discuss here.)
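The construction of Exercise 5 also applies over finite fields, which gives a cheap way to see the role of irreducibility. The brute-force sketch below (an illustration, not a solution of the exercise; helper names hypothetical) works in $\mathbb{F}_p[\mathrm{x}]/\langle \mathrm{x}^2+1 \rangle$, with each element $a + b\mathrm{x}$ stored as a pair of residues mod $p$. Since a polynomial of degree two over a field is irreducible exactly when it has no root in that field, the quotient should be a field precisely for those primes $p$ for which $t^2 + 1 \equiv 0 \pmod p$ has no solution.

```python
from itertools import product


def mul_mod(p, u, v):
    """Product of u = a + b x and v = c + d x in F_p[x]/<x^2 + 1>, using x^2 = -1."""
    a, b = u
    c, d = v
    return ((a * c - b * d) % p, (a * d + b * c) % p)


def is_field(p):
    """Brute force: does every non-zero element have a multiplicative inverse?"""
    elements = list(product(range(p), repeat=2))
    return all(
        any(mul_mod(p, u, v) == (1, 0) for v in elements)
        for u in elements
        if u != (0, 0)
    )


def x2_plus_1_has_root(p):
    """Does t^2 + 1 = 0 have a solution t in F_p?"""
    return any((t * t + 1) % p == 0 for t in range(p))


for p in (2, 3, 5, 7):
    print(p, x2_plus_1_has_root(p), is_field(p))
# 2 True False
# 3 False True
# 5 True False
# 7 False True
```

For instance, over $\mathbb{F}_5$ one has $2^2 + 1 = 5 \equiv 0$, so $\mathrm{x}^2+1 = (\mathrm{x}-2)(\mathrm{x}+2)$ is reducible and the quotient has zero divisors, just like the split complex numbers over $\mathbb{R}$.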

Exercise 6 Let $k$ be an arbitrary field. Show that every non-constant polynomial $P(\mathrm{x})$ in $k[\mathrm{x}]$ can be factored as the product $P = P_1 \cdots P_r$ of irreducible non-constant polynomials. Furthermore, show that this factorisation is unique up to permutation of the factors $P_1, \dots, P_r$, and multiplication of each of the factors by a constant (with the product of all such constants being one). In other words: the polynomial ring $k[\mathrm{x}]$ is a unique factorisation domain.

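Remark 7 (Real and imaginary coordinates) As a complex field $\mathbb{C}$ is spanned (over $\mathbb{R}$) by the linearly independent elements $1$ and $i$, we can write

$$\mathbb{C} = \{ a + bi : a, b \in \mathbb{R} \},$$

with each element $z$ of $\mathbb{C}$ having a unique representation of the form $z = a + bi$, thus

$$a + bi = c + di \iff a = c \text{ and } b = d$$

for real $a, b, c, d$. The addition, subtraction, and multiplication operations can then be written down explicitly in these coordinates as

$$(a+bi) + (c+di) = (a+c) + (b+d)i$$
$$(a+bi) - (c+di) = (a-c) + (b-d)i$$
$$(a+bi)(c+di) = (ac-bd) + (ad+bc)i$$

and with a bit more work one can compute the division operation as

$$\frac{a+bi}{c+di} = \frac{ac+bd}{c^2+d^2} + \frac{bc-ad}{c^2+d^2} i$$

if $c+di \neq 0$. One could take these coordinate representations as the definition of the complex field $\mathbb{C}$ and its basic arithmetic operations, and this is indeed done in many texts introducing the complex numbers. In particular, one could take the Argand plane $\mathbb{R}^2$ (with $i$ identified with $(0,1)$) as the choice of complex field, where we identify each point $(a,b)$ in $\mathbb{R}^2$ with $a+bi$ (so for instance $\mathbb{R}^2$ becomes endowed with the multiplication operation $(a,b)(c,d) := (ac-bd, ad+bc)$).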

This is a very concrete and direct way to construct the complex numbers; the main drawback is that it is not immediately obvious that the field axioms are all satisfied. For instance, the associativity of multiplication is rather tedious to verify in the coordinates of the Argand plane. In contrast, the more abstract algebraic construction of the complex numbers given above makes it more evident what the source of the field structure on $\mathbb{C}$ is, namely the irreducibility of the polynomial $\mathrm{x}^2+1$.
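The coordinate formulas of Remark 7 are easy to transcribe. The sketch below implements the Argand-plane operations on pairs $(a,b)$ using exact rationals (`fractions.Fraction`, an assumption in place of real numbers so that equality tests are exact), compares multiplication with Python's built-in `complex` type, and spot-checks associativity of multiplication on random inputs. A finite spot check is of course no substitute for verifying the field axioms; the function names are hypothetical.

```python
from fractions import Fraction
from random import Random


def add(z, w):
    return (z[0] + w[0], z[1] + w[1])


def sub(z, w):
    return (z[0] - w[0], z[1] - w[1])


def mul(z, w):
    """(a, b)(c, d) = (ac - bd, ad + bc)."""
    (a, b), (c, d) = z, w
    return (a * c - b * d, a * d + b * c)


def div(z, w):
    """(a + bi) / (c + di) = ((ac + bd) + (bc - ad) i) / (c^2 + d^2), for (c, d) != (0, 0)."""
    (a, b), (c, d) = z, w
    n = c * c + d * d
    if n == 0:
        raise ZeroDivisionError("division by 0 + 0i")
    return ((a * c + b * d) / n, (b * c - a * d) / n)


def random_point(rng):
    """A random point of Q^2 with small numerators and denominators."""
    return (Fraction(rng.randint(-9, 9), rng.randint(1, 9)),
            Fraction(rng.randint(-9, 9), rng.randint(1, 9)))


i = (Fraction(0), Fraction(1))
print(mul(i, i))                          # (Fraction(-1, 1), Fraction(0, 1)), i.e. i^2 = -1
print(mul((1, 2), (3, 4)), (1 + 2j) * (3 + 4j))   # (-5, 10) (-5+10j)

# Spot check: associativity of multiplication, and division undoing multiplication.
rng = Random(0)
for _ in range(1000):
    z, w, u = random_point(rng), random_point(rng), random_point(rng)
    assert mul(mul(z, w), u) == mul(z, mul(w, u))
    if w != (0, 0):
        assert div(mul(z, w), w) == z
```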

Remark 8 Because of the Argand plane construction, we will sometimes refer to the space $\mathbb{C}$ of complex numbers as the complex plane. We should warn, though, that in some areas of mathematics, particularly in algebraic geometry, $\mathbb{C}$ is viewed as a one-dimensional complex vector space (or a one-dimensional complex manifold or complex variety), and so $\mathbb{C}$ is sometimes referred to in those cases as a complex line. (Similarly, Riemann surfaces, which from a real point of view are two-dimensional surfaces, can sometimes be referred to as complex curves in the literature; the modular curve is a famous instance of this.) In this current course, though, the topological notion of dimension turns out to be more important than the algebraic notions of dimension, and as such we shall generally refer to $\mathbb{C}$ as a plane rather than a line.

Elements of $\mathbb{C}$ of the form $bi$ with $b$ real are known as purely imaginary numbers; the terminology is colourful, but despite the name, imaginary numbers have precisely the same first-class mathematical object status as real numbers. If $z = a + bi$ is a complex number with $a, b$ real, then $a$ and $b$ are known as the real part $\mathrm{Re}(z)$ and the imaginary part $\mathrm{Im}(z)$ of $z$ respectively.

Remark 9 We noted earlier that the equation $x^2+1=0$ had no solutions in the reals because $x^2+1$ was always positive. In other words, the properties of the order relation $<$ on $\mathbb{R}$ prevented the existence of a root for the equation $x^2+1=0$. As $\mathbb{C}$ does have a root for $x^2+1=0$, this means that the complex numbers $\mathbb{C}$ cannot be ordered in the same way that the reals are ordered (that is to say, being totally ordered, with the positive numbers closed under both addition and multiplication): in any such ordering every non-zero square is positive, so $-1 = i^2$ would be positive, contradicting the positivity of $1 = 1^2$. Indeed, one usually refrains from putting any order structure on the complex numbers, so that statements such as $z < w$ for complex numbers $z, w$ are left undefined (unless $z, w$ are real, in which case one can of course use the real ordering). In particular, the complex number $i$ is considered to be neither positive nor negative, and an assertion such as